Table of Contents

- Chapter-1: Getting Started with Microservices

- What are Microservices?

- Microservices vs Monolithic Architectures

- Advantages of Microservices

- Challenges and Considerations

- Key Technologies and Tools

- Microservices Communication

- Domain-Driven Design (DDD)

- Task-1: Identify Microservices for the book

- Task-2: Identify the List of Git Repositories Needed

- Task-3: Create new Azure DevOps Organization

- Task-4: Create new Azure DevOps Project

- Chapter-2: Docker Fundamentals

- Chapter-3: Getting Started with Docker

- Chapter-4: Create Your First Microservice with .NET Core Web API

- Step-1: Create a new repo in Azure DevOps

- Step-2: Clone the repo from Azure DevOps

- Step-3: Create a new .NET Core Web API project

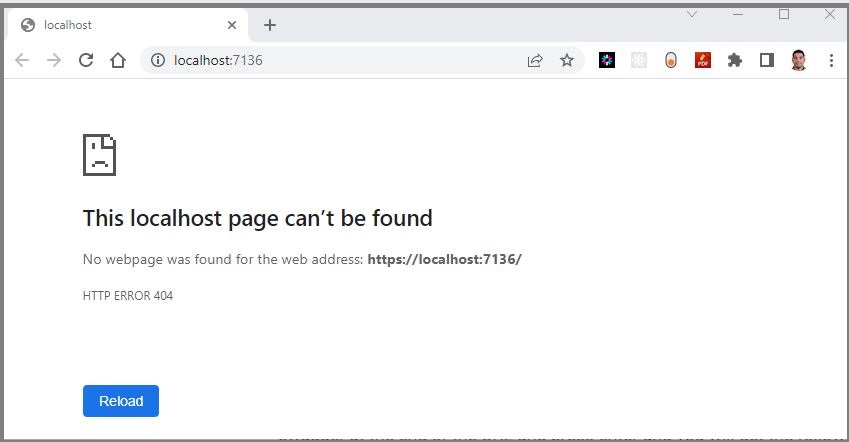

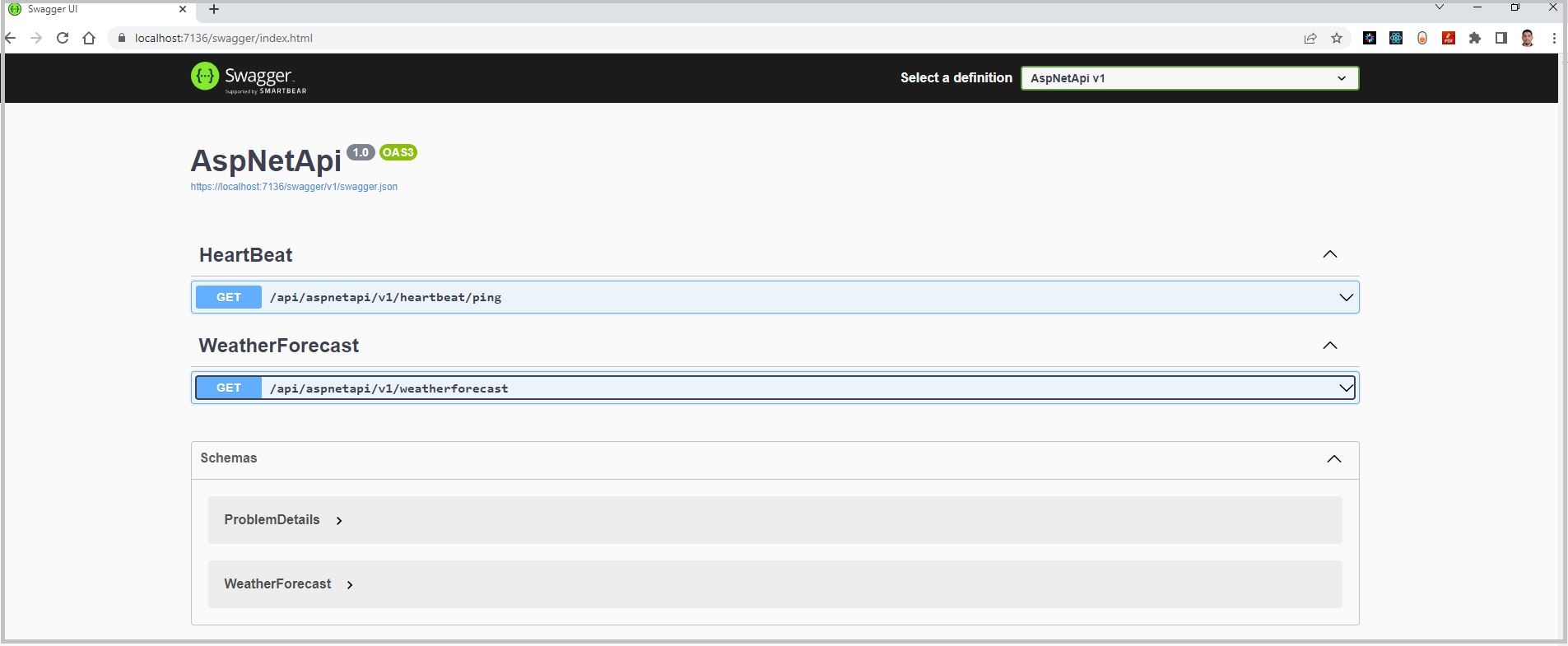

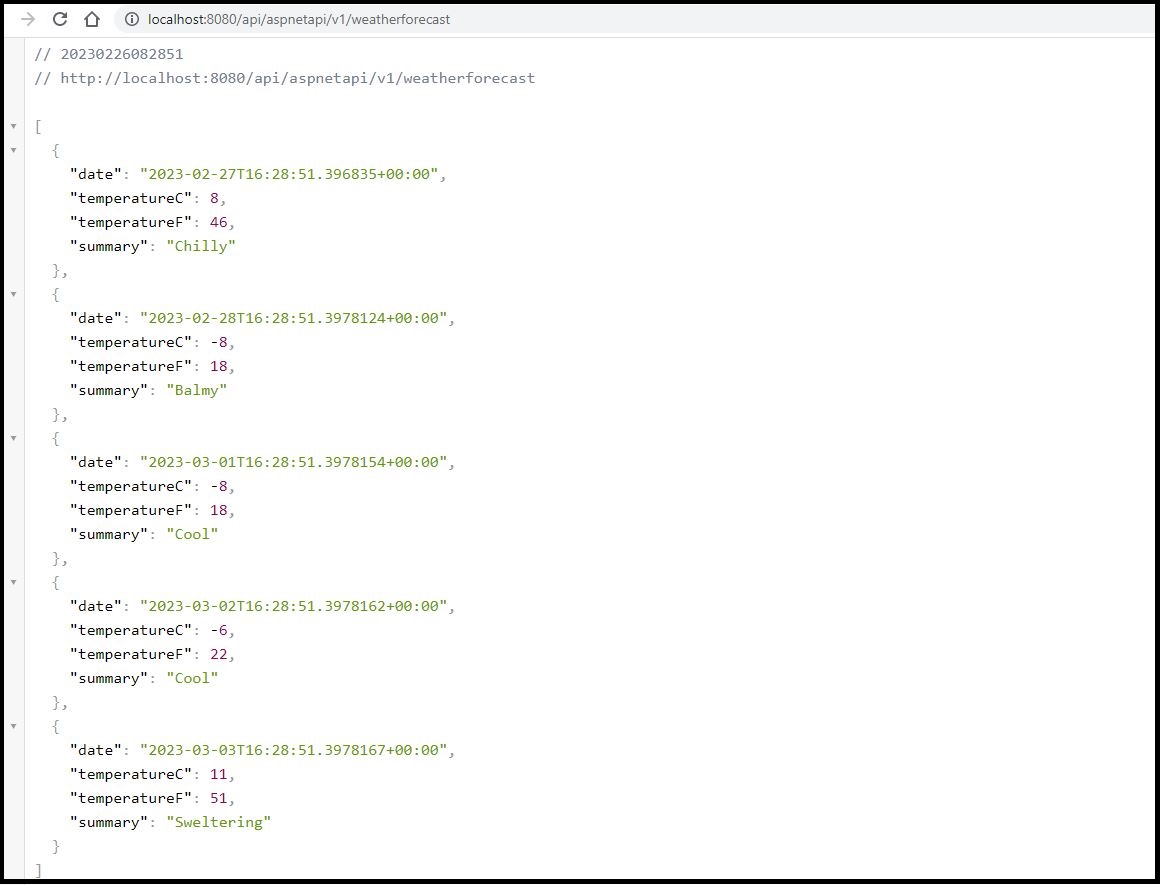

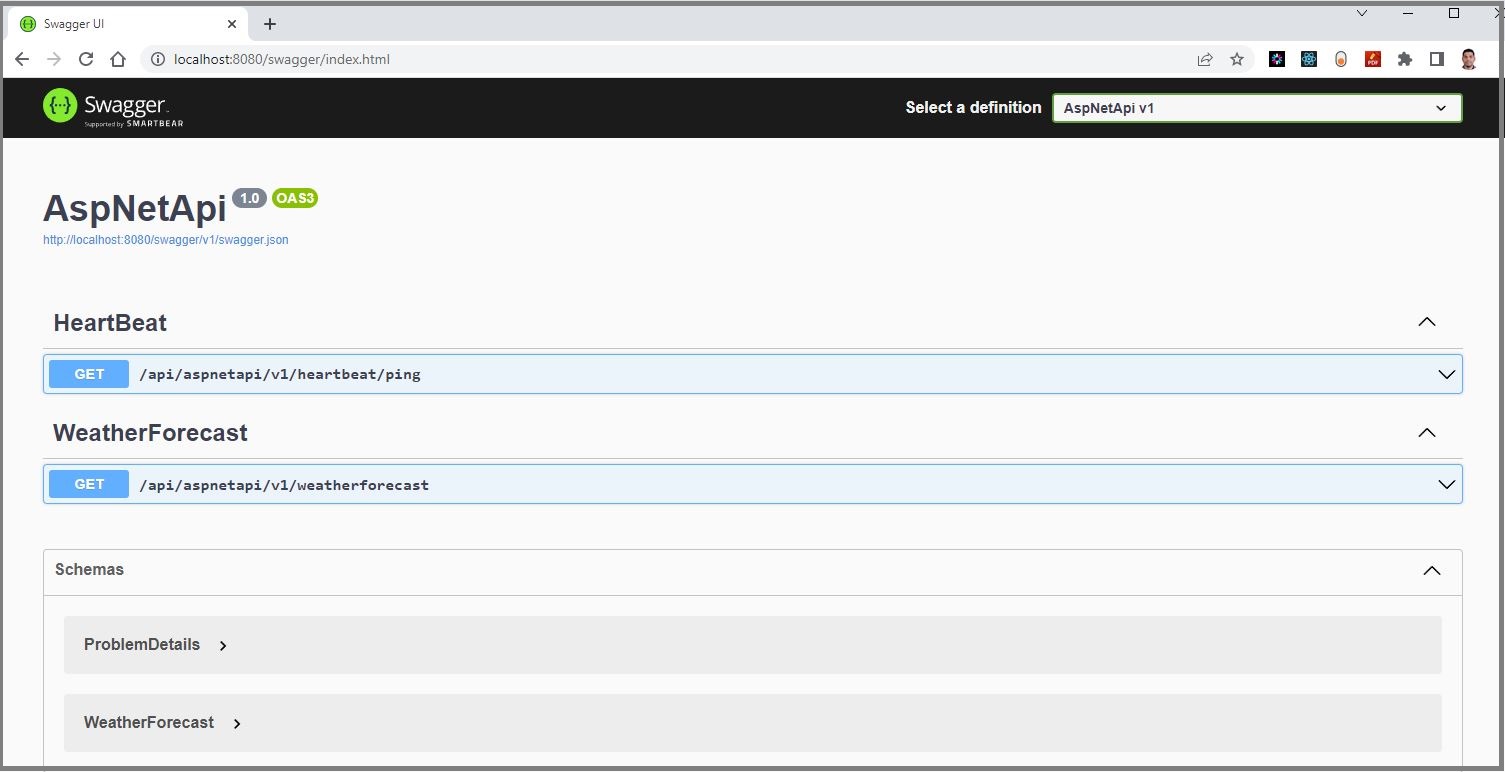

- Step-4: Test the new .NET Core Web API project

- Step-5: Add Dockerfiles to the API project

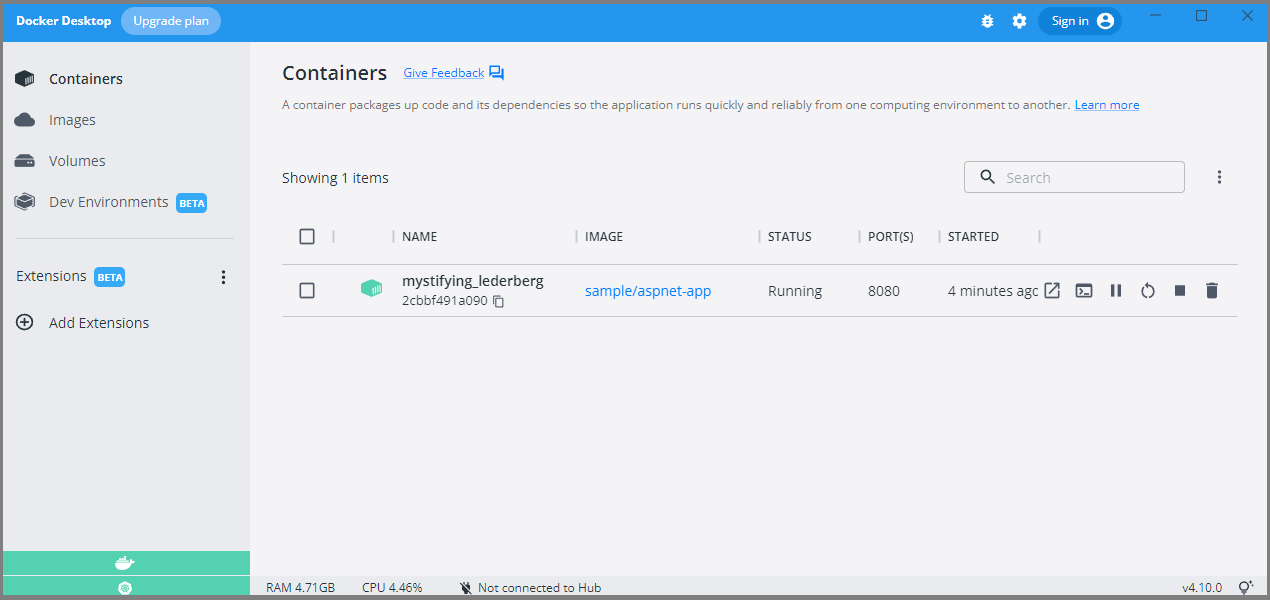

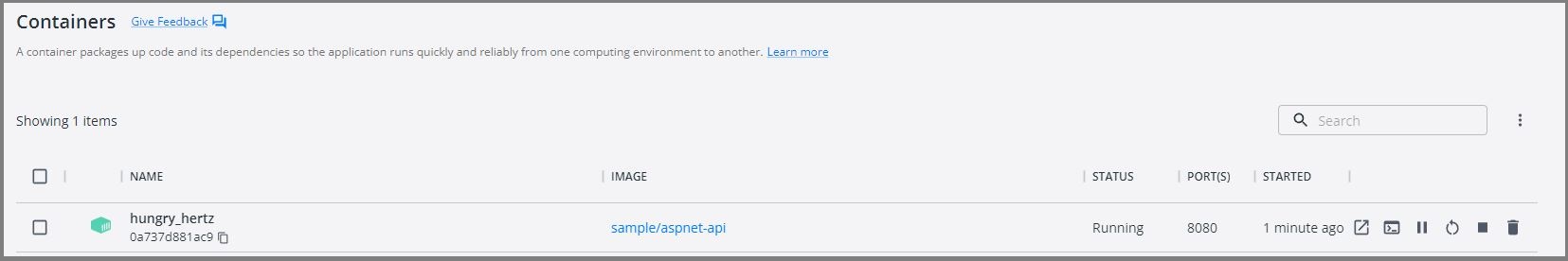

- Step-6: Docker Build & Run

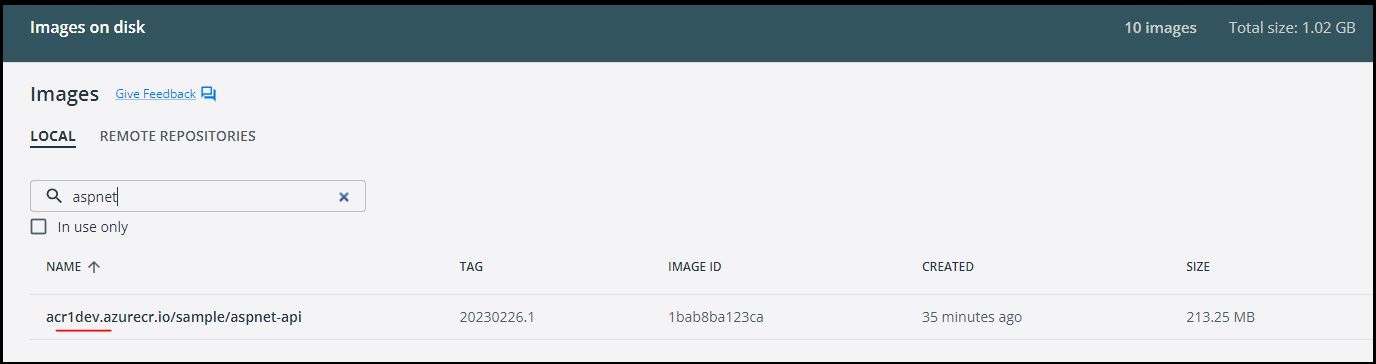

- Step-7: Push docker container to ACR

- Step-8: Pull docker container from ACR

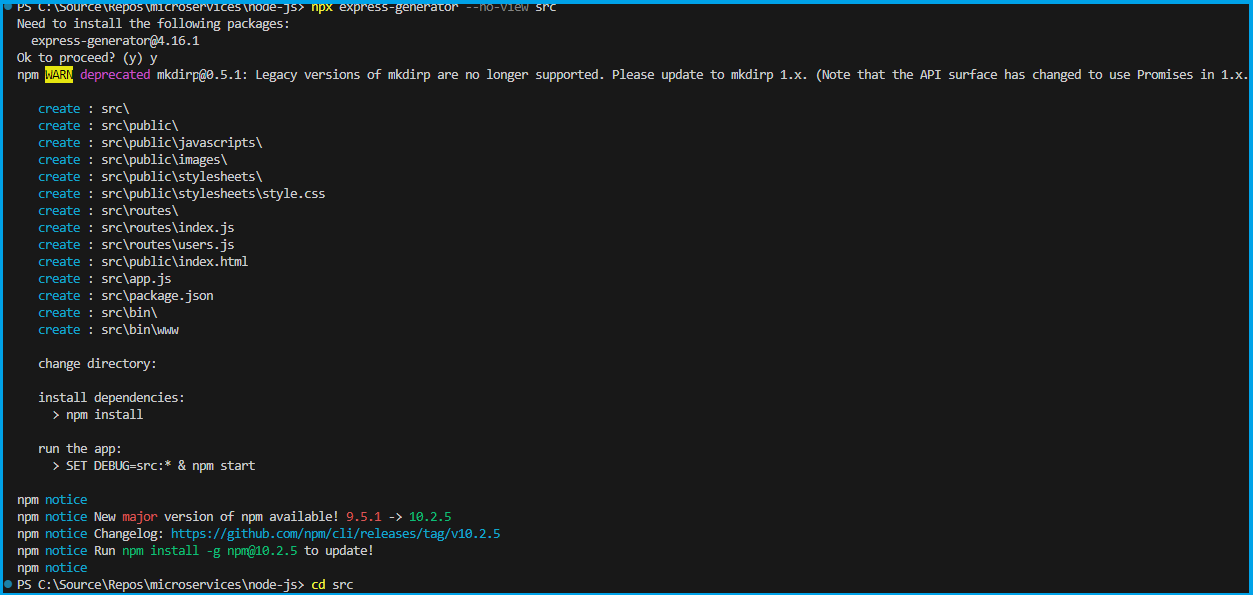

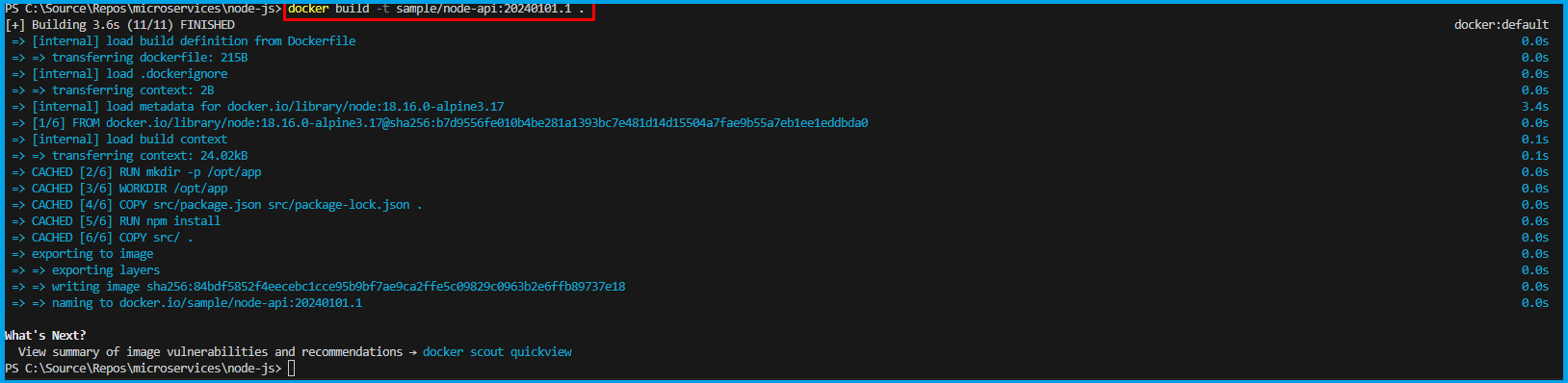

- Chapter-5: Create Your Second Microservice with Node.js

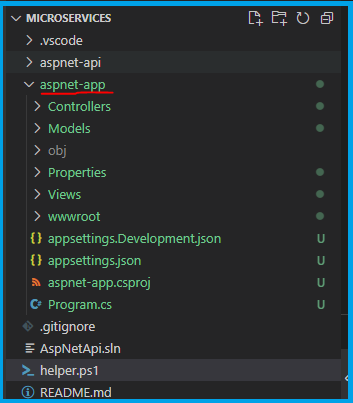

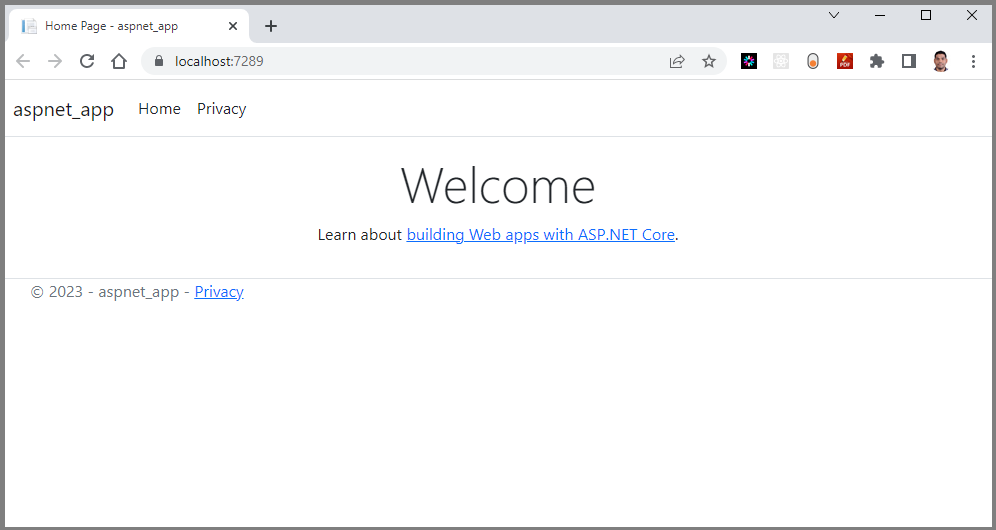

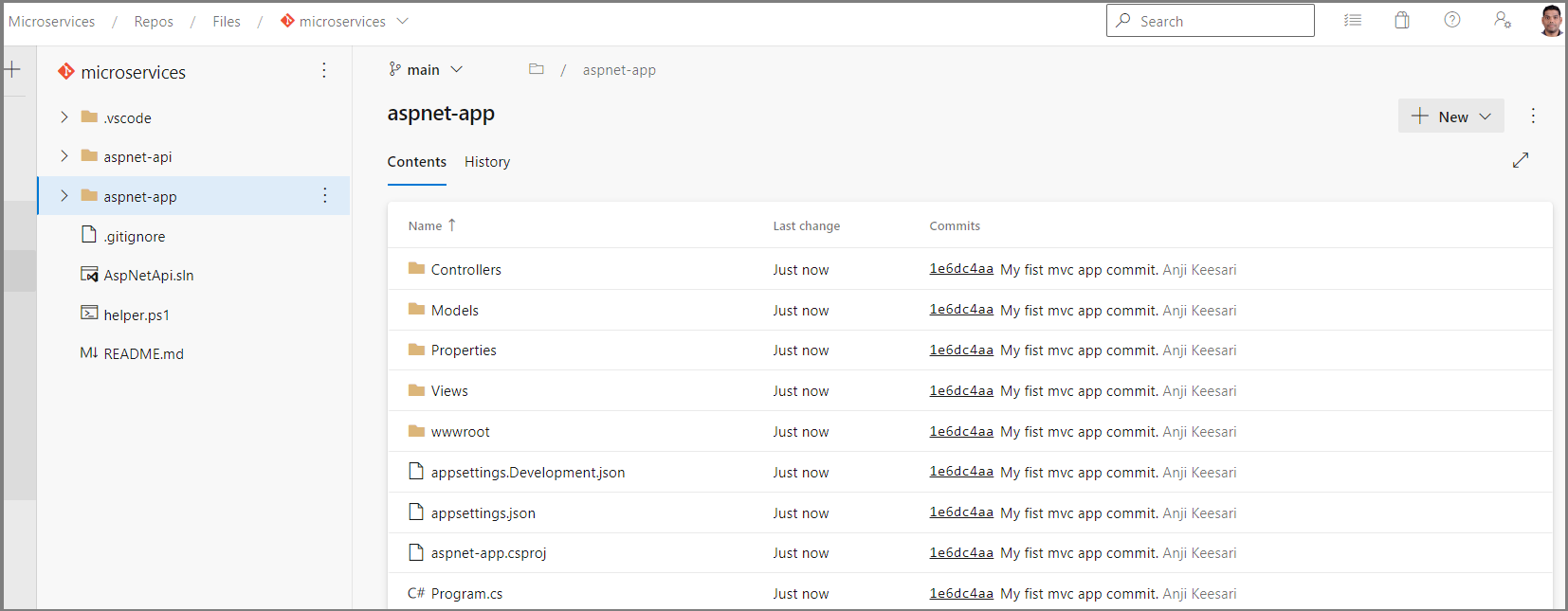

- Chapter-6: Create Your First Website using .NET Core MVC Application

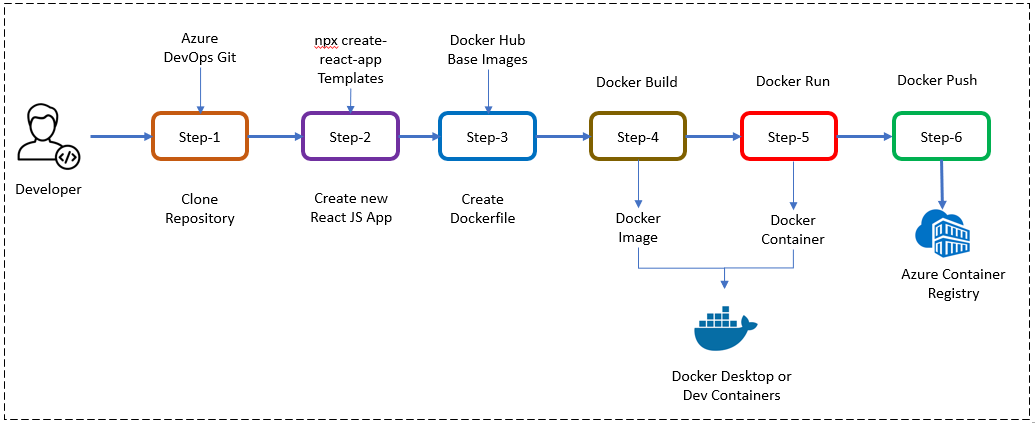

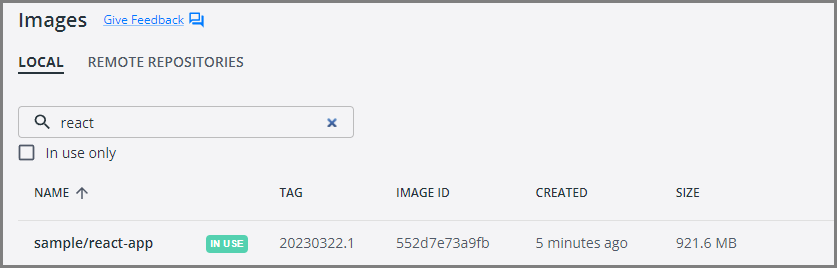

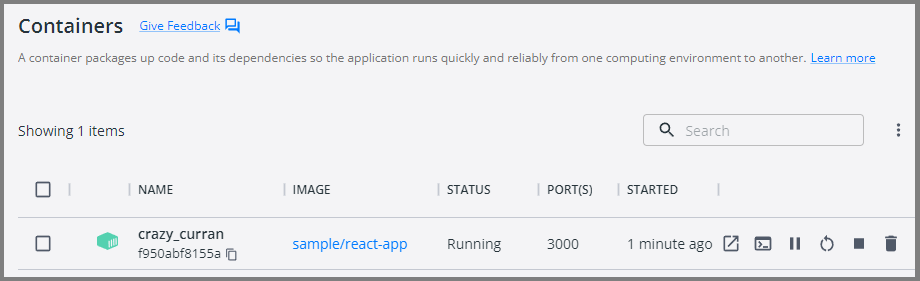

- Chapter-7: Create Your Second Website using React.js

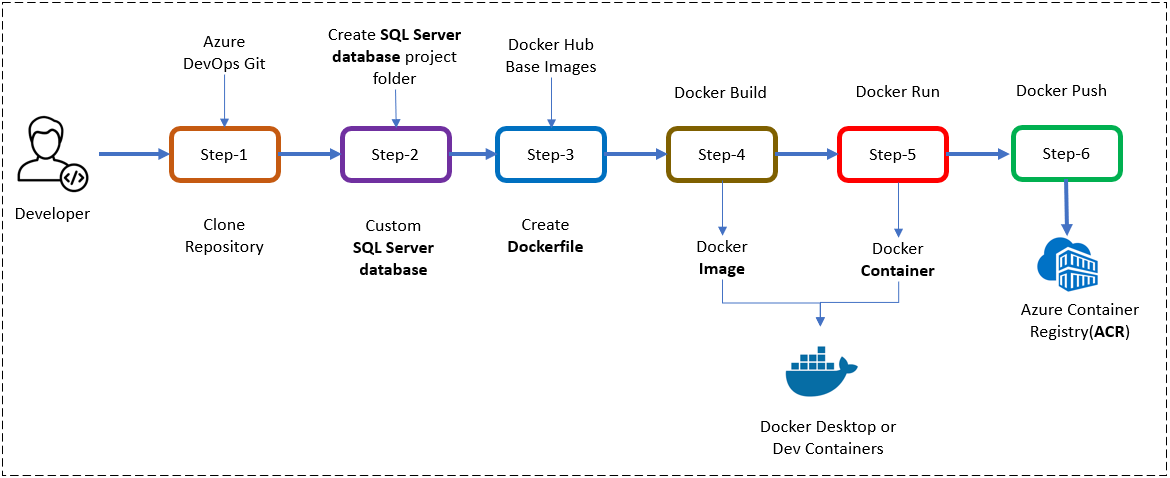

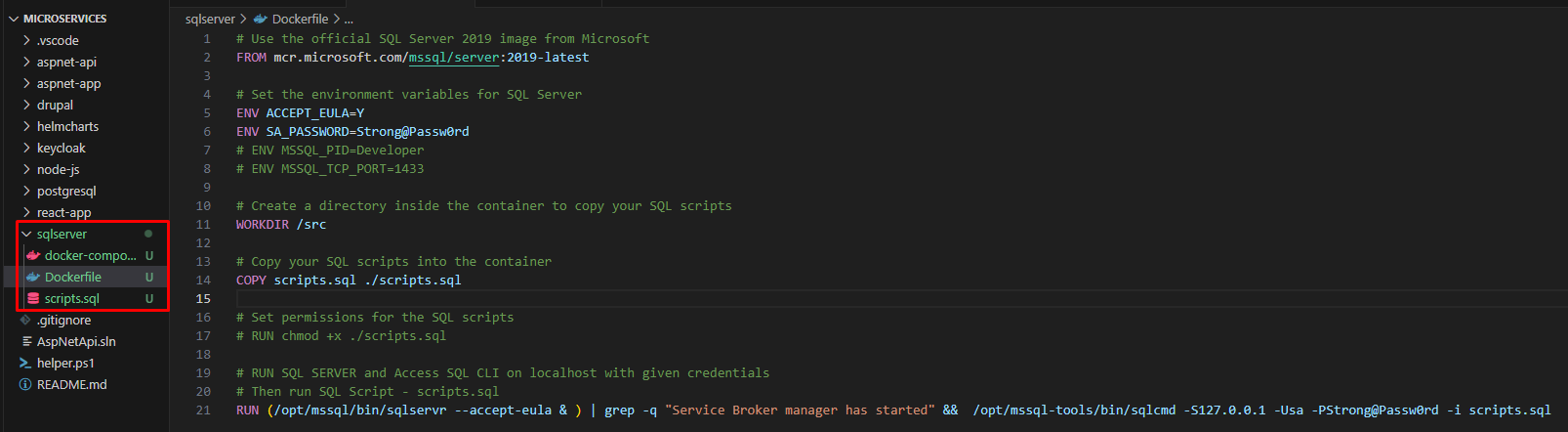

- Chapter-8: Create your First Database with SQL Server

- Benefits of SQL Server Container

- Step-1: Setup Git Repository for SQL Server database

- Step-2: Create Folder Structure for SQL Server database

- Step-3: Add Dockerfiles to the Database Project

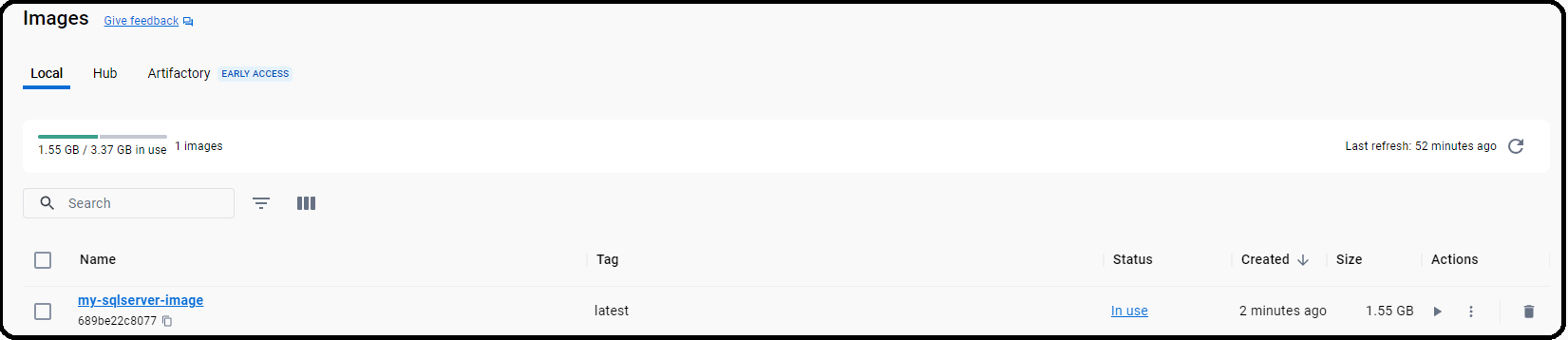

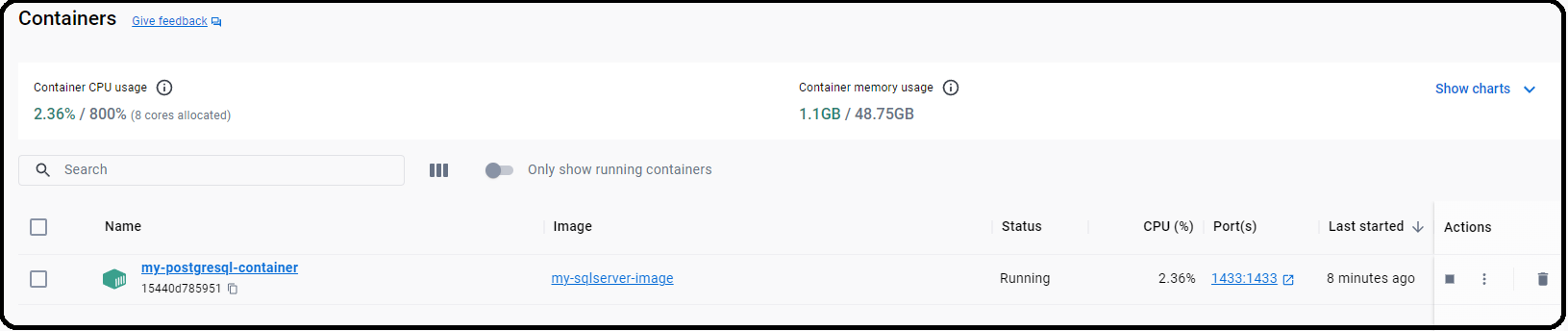

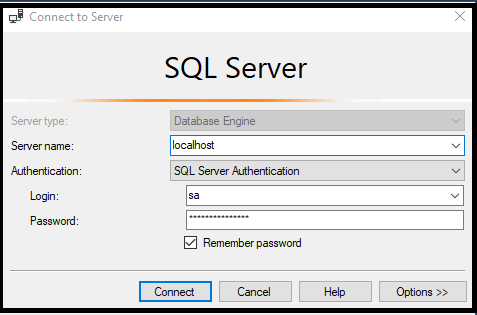

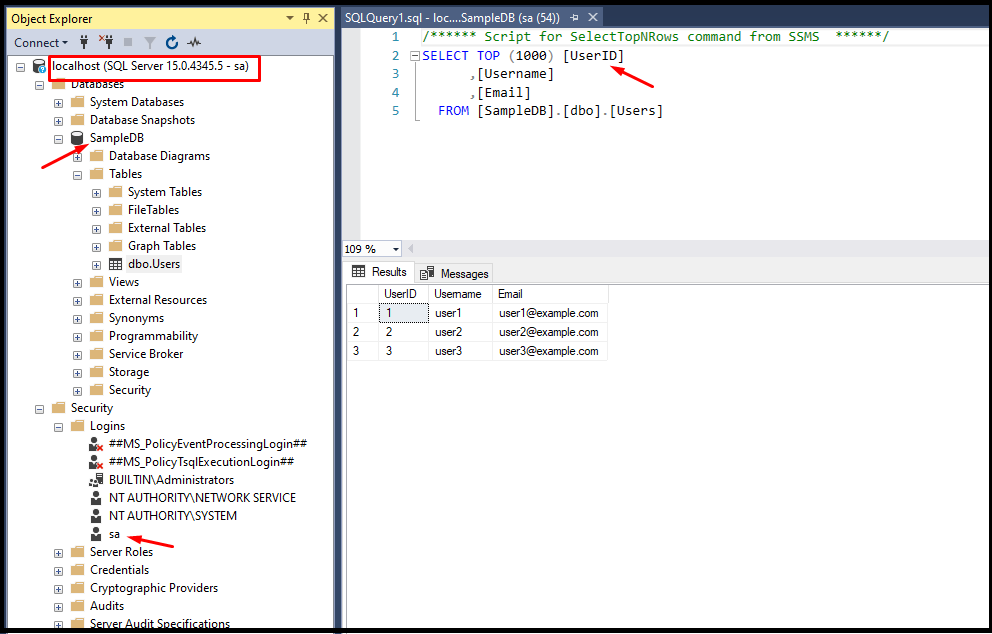

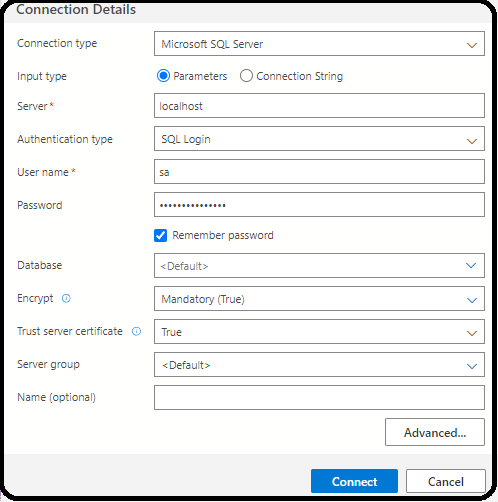

- Step-4: Test the SQL Server database connection using SSMS

- Step-5: Test the SQL Server database connection using Azure Data Studio

- Step-6: Push Docker Container to ACR

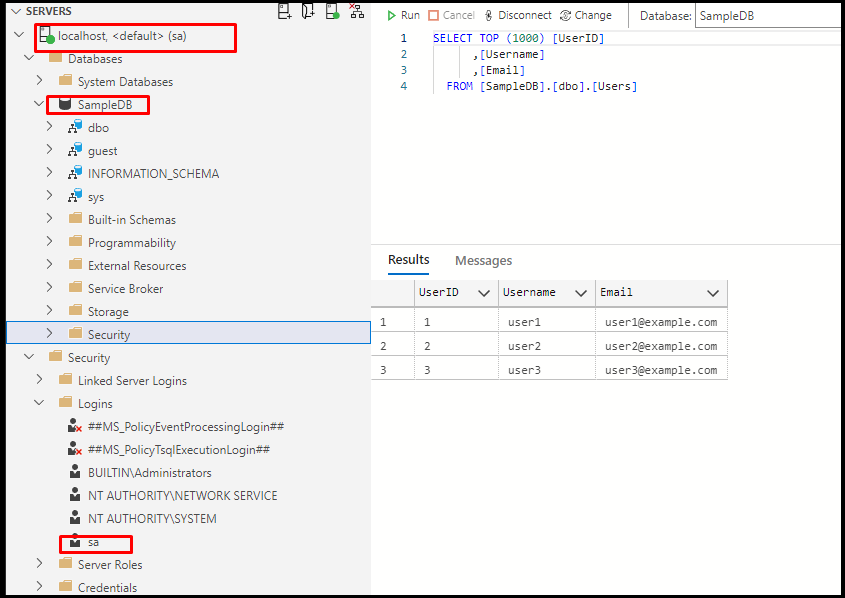

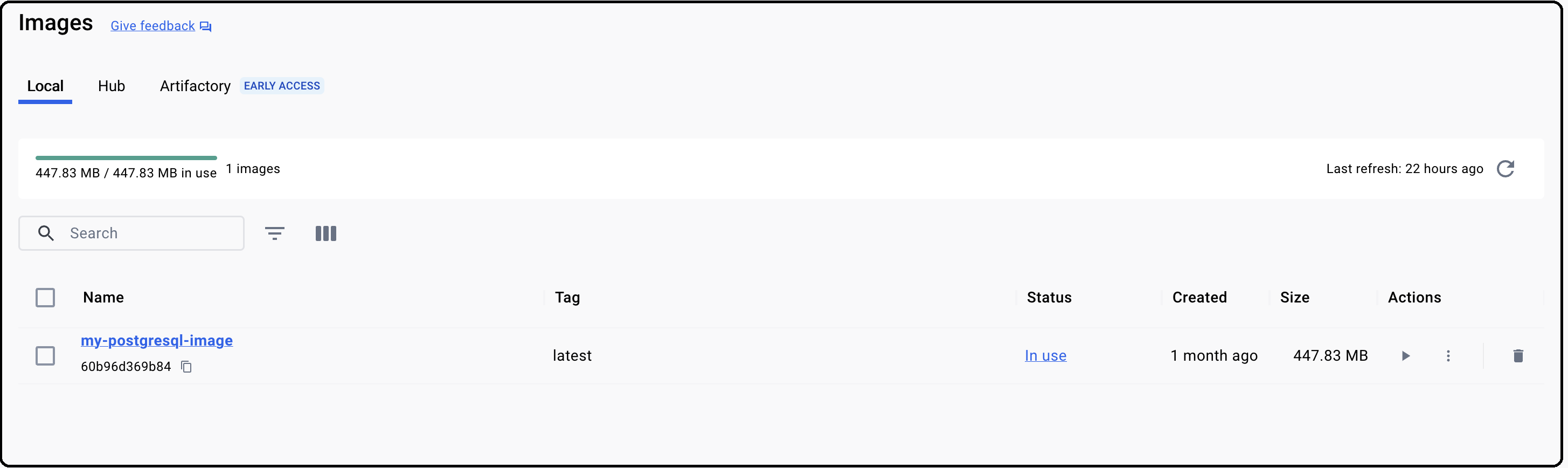

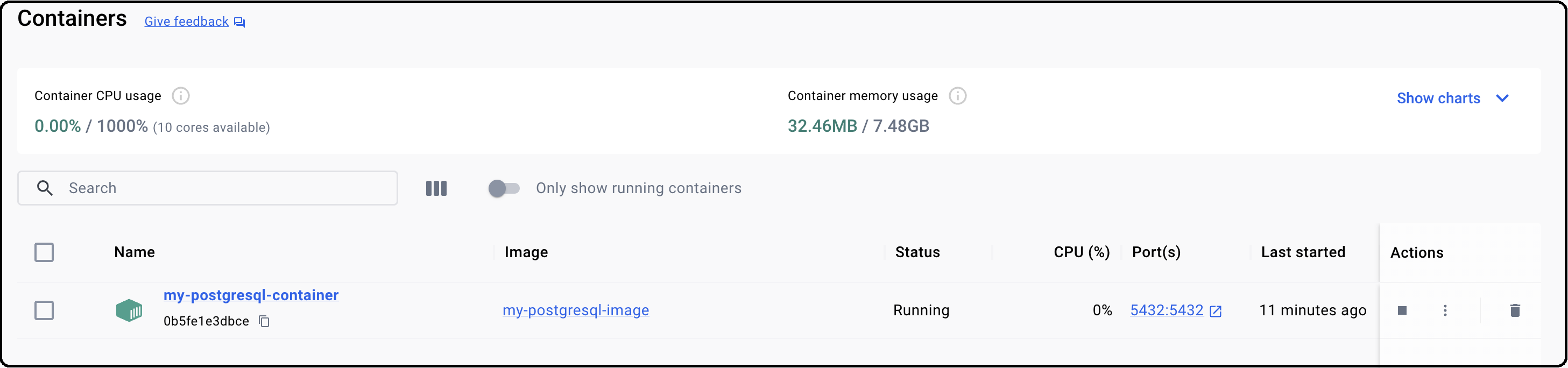

- Chapter-9: Setting up PostgreSQL database in a Docker Container

- Step-1: Setup Git Repository for PostgreSQL database

- Step-2: Create Folder Structure for PostgreSQL database

- Step-3: Add Dockerfiles to the Database Project

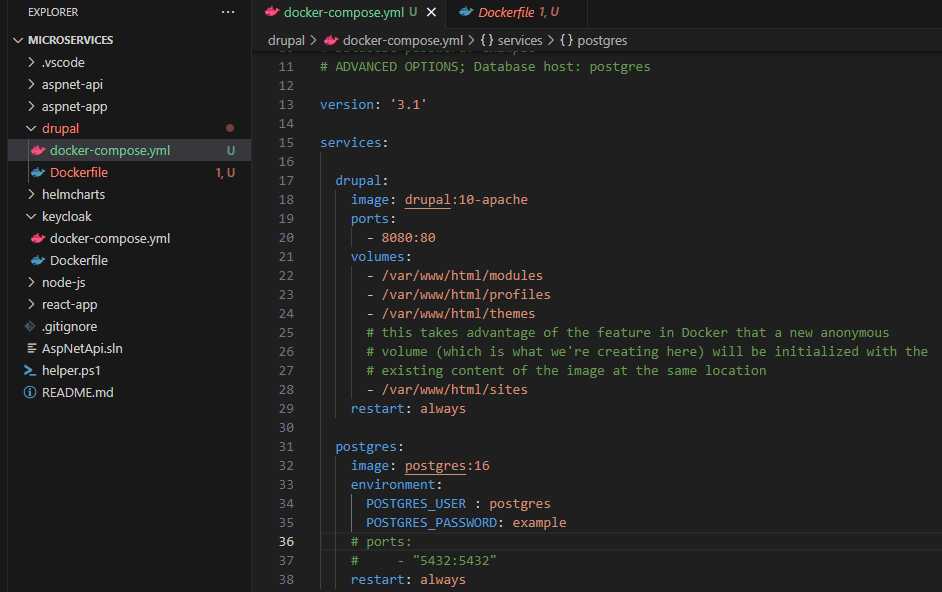

- Step-4: Create Docker Compose file

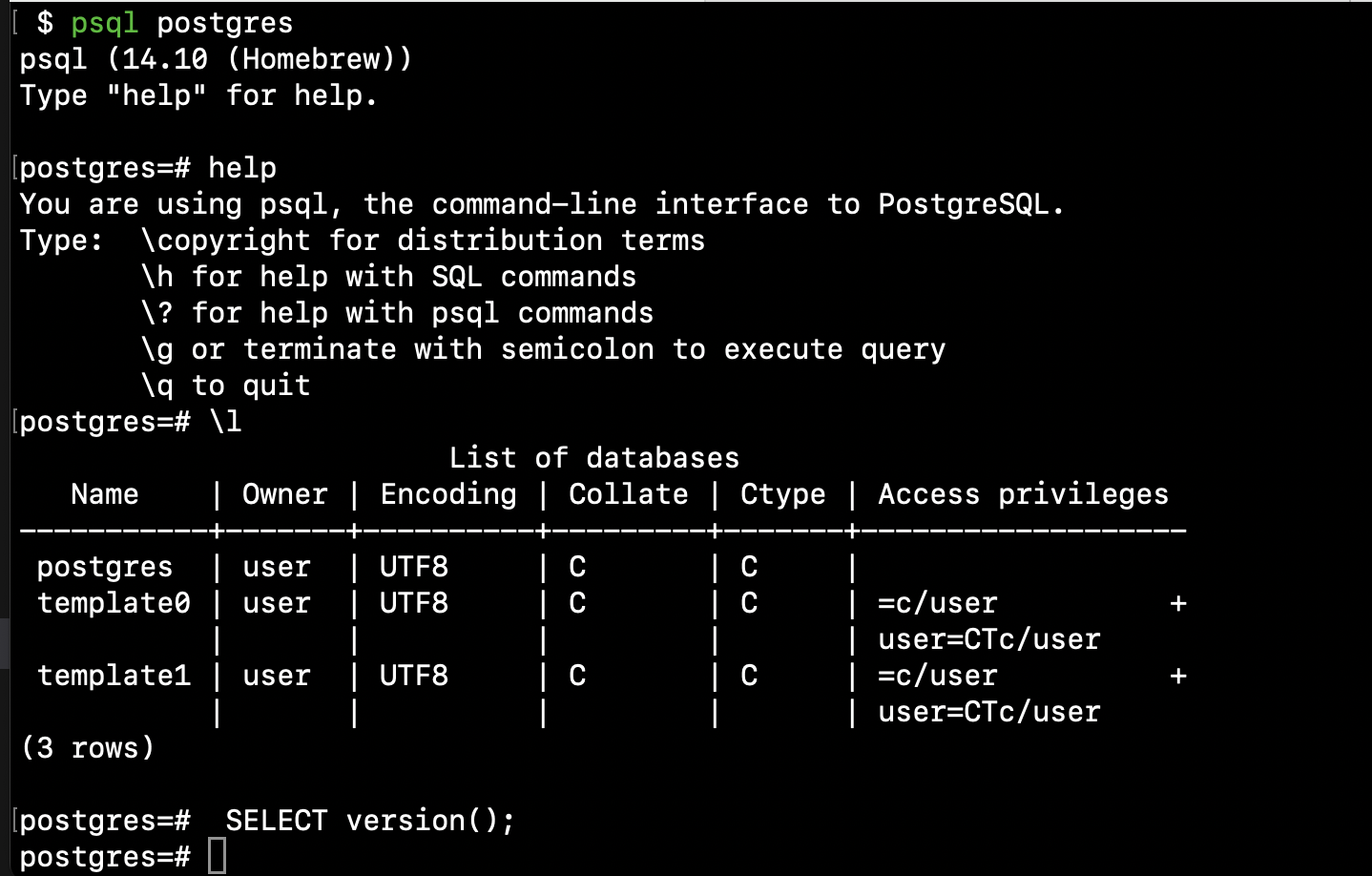

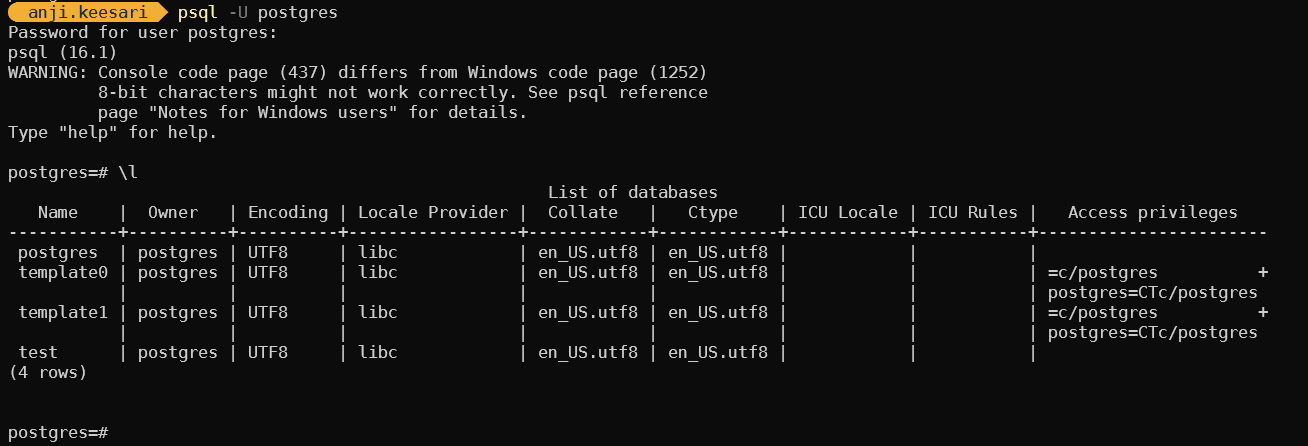

- Step-5: Test the PostgreSQL database connection from psql tool

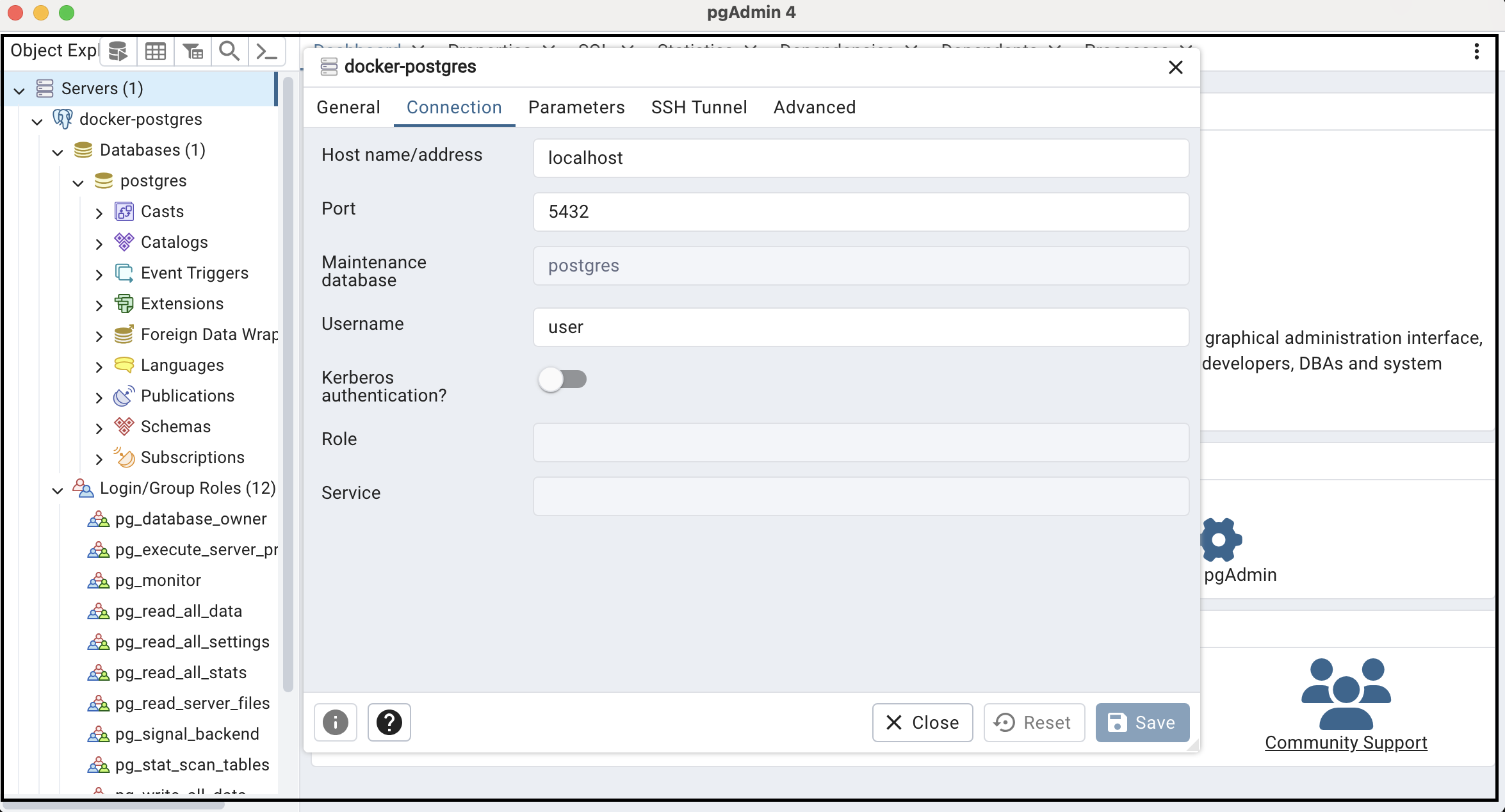

- Step-6: Test the PostgreSQL database from pgadmin4 tool

- Step-7: Push Docker Container to ACR

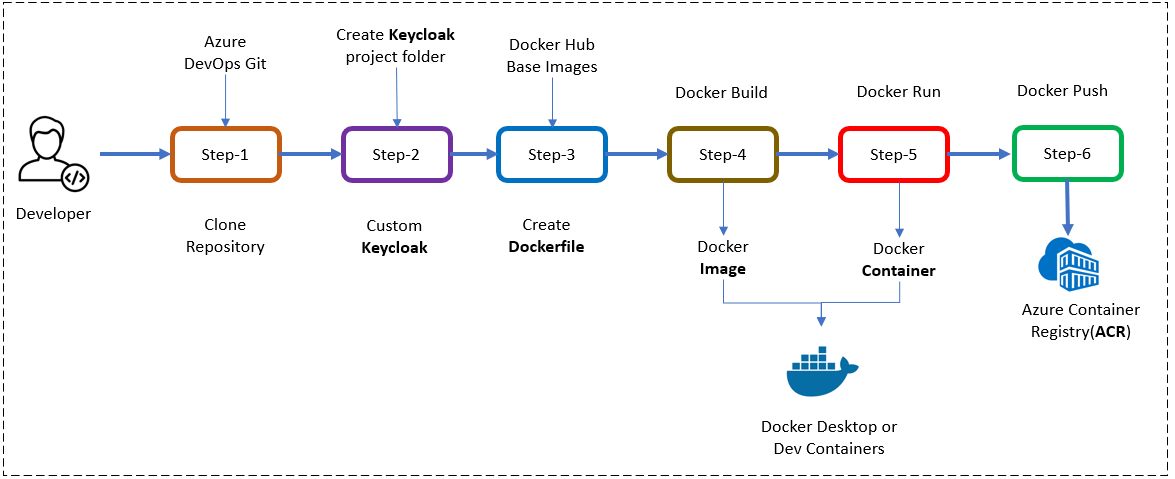

- Chapter-10: Setting up Keycloak in a Docker Container

- Step-1: Setup repository for Keycloak in Azure DevOps

- Step-2: Create Keycloak project

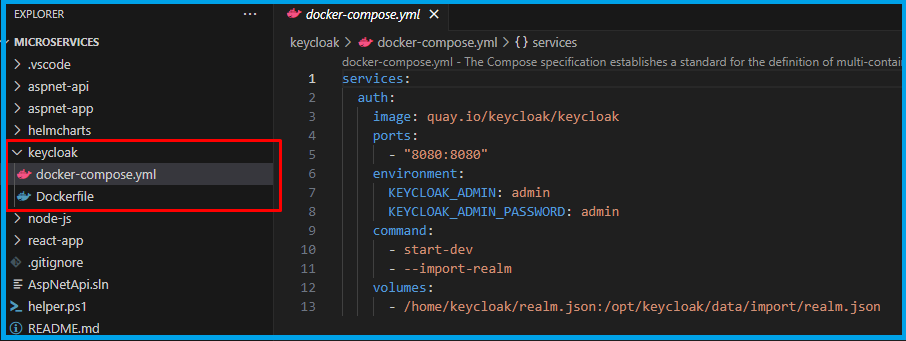

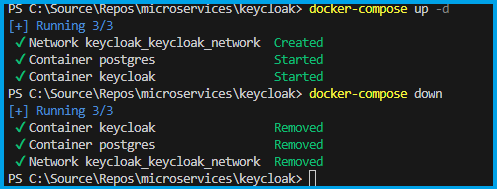

- Step-3: Keycloak setup with docker compose

- Step-3.1: Setup Keycloak Service

- Step-3.2: Setup Keycloak Service with PostgreSQL database

- Step-4: Keycloak setup with Dockerfile

- Step-4.1: Create Dockerfile

- Step-4.2: Docker build locally

- Step-4.3: Docker run locally

- Step-5: Publish the Keycloak docker container to container registry

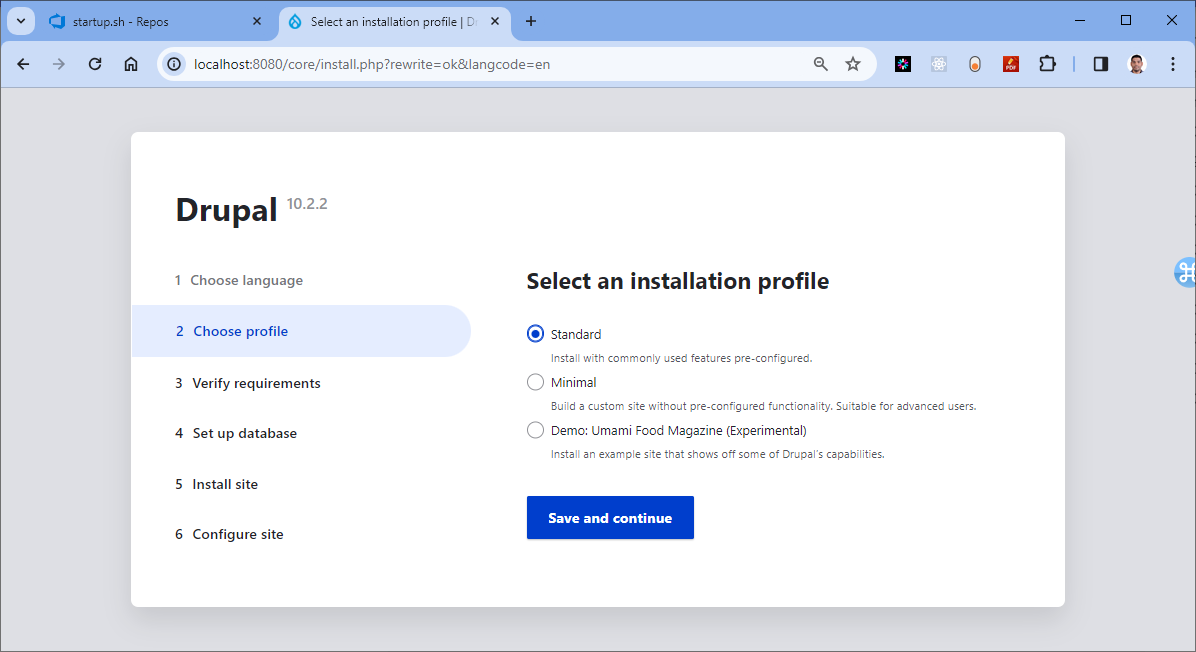

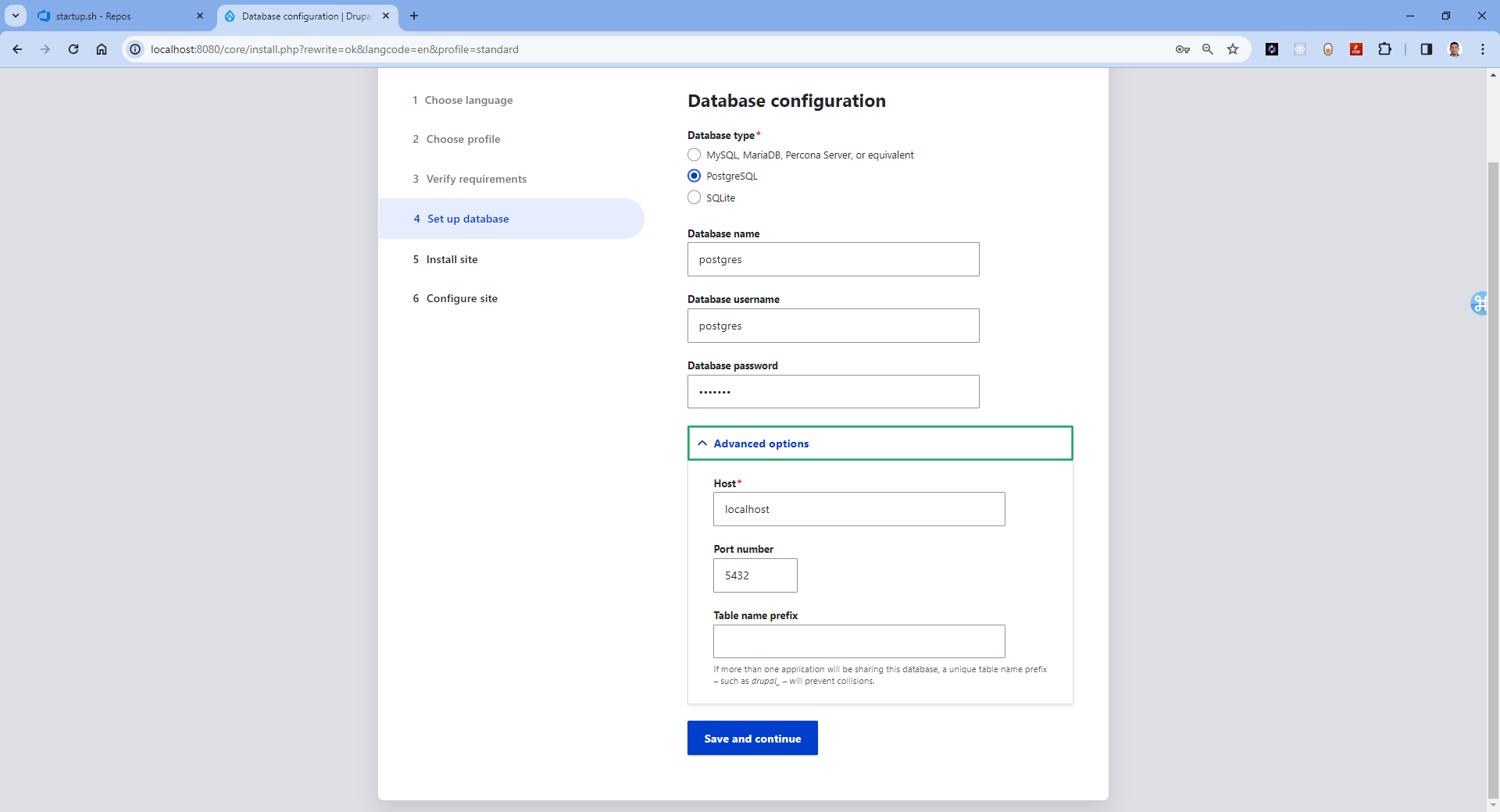

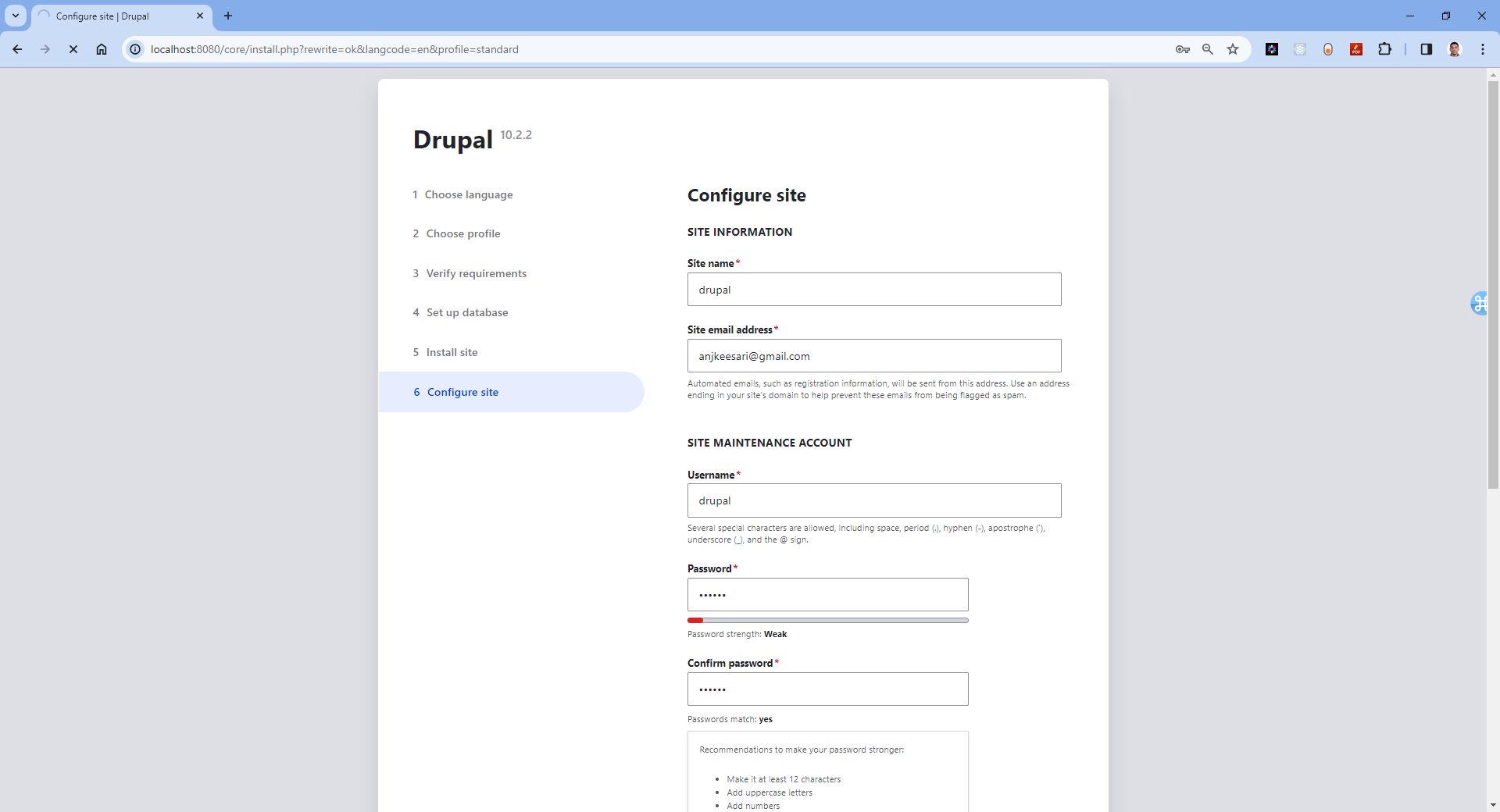

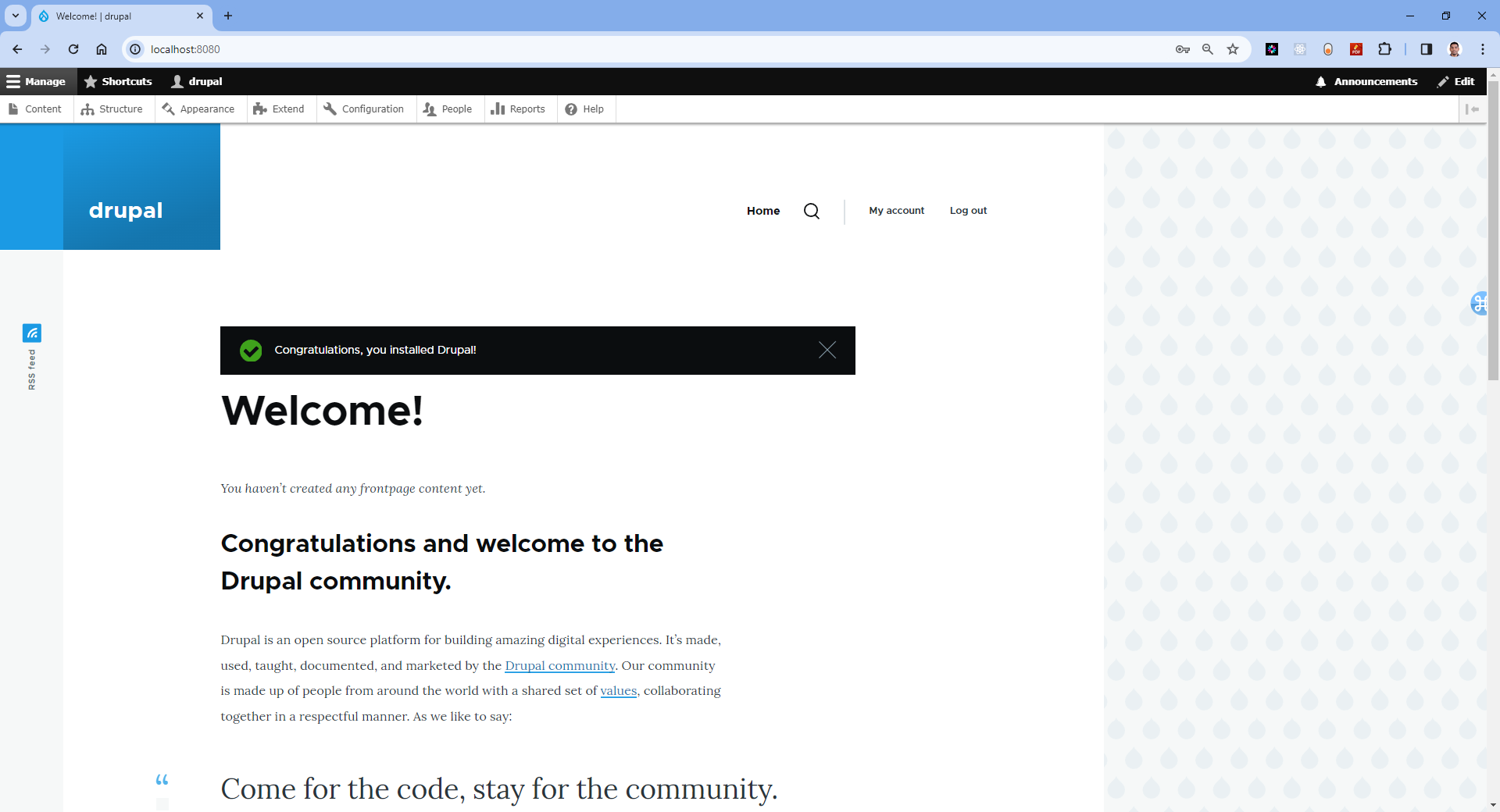

- Chapter-11: Setting up Drupal in a Docker Container

- Appendix:

About the Author

Anji Keesari is a software engineer and cloud architect with over 20 years of experience in the technology industry. He has been involved in numerous projects related to cloud computing, microservices architecture, and technologies such as Kubernetes, Terraform, and containers.

Anji has hands-on experience deploying and managing Kubernetes clusters in production environments. He is also proficient in tools like ArgoCD and Helm, utilizing them to deploy microservices applications on Kubernetes. Anji also has extensive knowledge of containers and containerization technologies, including Docker and container orchestration tools such as Kubernetes.

Apart from his expertise in Kubernetes and related tools, Anji has a strong background in Terraform, utilizing it to deploy infrastructure on various cloud platforms, including Azure and AWS.

Anji has a passion for teaching and sharing his knowledge with others. He has written numerous articles and tutorials on Kubernetes, ArgoCD, and Helm, and published them on Medium.

With his extensive knowledge and experience in Kubernetes, ArgoCD, and Helm, Anji is the perfect author for the following two books:

- Building Microservices with Containers (A Practical Guide)

- Building Scalable Kubernetes Infrastructure for Microservices (A Practical Guide)

Throughout his career, Anji has worked with various companies in diverse domains such as Banking, Healthcare, and Finance, across countries such as India, the UK, and the US. He is dedicated to making a significant impact in his workplace and helping others along the way.

During his free time, Anji finds joy in various activities such as playing soccer, going hiking, exploring new places, and most importantly, spending quality time with his loved ones.

For any questions, suggestions, or topic requests, feel free to drop him a message, and he'll get in touch when his schedule permits.

Contact Information:

- Email: anjkeesari@gmail.com

- Website: https://anjikeesari.com

Acknowledgments

Writing a book is a collaborative effort, and I could not have done it without the help and support of many people.

First and foremost, I would like to thank my family for their patience, understanding, and encouragement throughout this project; they sacrificed a lot of (long) weekends. Their love and support kept me going during the long hours of writing and editing.

While writing this book, I referred to a lot of online material. I would also like to thank the many individuals and organizations who have contributed to the development of Kubernetes, ArgoCD, Helm, Terraform, and containerization technologies. Without their hard work and dedication, this book would not be possible.

Finally, I would like to thank the readers of this book for their interest and support. I hope that this book will be a valuable resource for anyone who wants to learn how to build and deploy microservices applications on Kubernetes using ArgoCD and Helm.

Thank you all for your contributions and support.

Warm regards,

Anji Keesari

Introduction

Welcome to Building Microservices with Containers: A Practical Guide. In today's rapidly evolving technological landscape, scalable, flexible, and resilient software solutions are in high demand. In response to this demand, the architecture of choice for many modern applications is microservices. Microservices enable the development of complex systems as a set of small, independently deployable services.

This book is your detailed guide to understanding and implementing microservices architecture using containerization technology, specifically Docker. Whether you're an experienced application developer looking to adopt microservices or new to the technology, this book will provide you with the knowledge and hands-on experience necessary to succeed in building scalable and maintainable applications.

Why Microservices?

Before looking into the technical details, let's briefly explore why microservices have become the architecture of choice for many organizations. Microservices offer several advantages over traditional monolithic architectures, including:

- Scalability: Microservices allow individual components of an application to scale independently, enabling better resource utilization and improved performance.

- Flexibility: With microservices, teams can choose the most appropriate technology stack for each service, leading to greater flexibility and innovation.

- Resilience: Isolating services from each other reduces the impact of failures, making the overall system more resilient.

- Continuous Delivery: Microservices facilitate continuous delivery and deployment practices, enabling teams to release updates quickly and frequently.

Why Containers?

While microservices offer numerous benefits, managing a large number of services can be challenging. This is where containerization comes into play. Containers provide lightweight, portable, and isolated environments for running applications, making it easier to package, deploy, and manage microservices at scale. Docker, one of the most popular containerization platforms, has revolutionized the way developers build, ship, and run applications.

What You'll Learn

In this book, we'll start by covering the fundamentals of microservices architecture and Docker containerization. We'll then guide you through the process of building and deploying microservices using a variety of technologies, including .NET Core, Node.js, React.js, and SQL databases. Along the way, you'll learn how to:

- Containerize microservices using Docker.

- Orchestrate containers with Docker Compose.

- Implement authentication and authorization using Keycloak.

- Build web applications with popular frameworks like .NET Core MVC and React.js.

- Set up and manage databases within containers using SQL Server and PostgreSQL.

- Deploy and scale microservices in a production environment.

Each chapter includes practical, hands-on tutorials and real-world examples to help reinforce your understanding of the concepts covered. By the end of this book, you'll have the knowledge and skills to design, build, and deploy microservices-based applications with confidence.

Who This Book Is For

This book is designed for developers, architects, and DevOps engineers who are interested in adopting microservices architecture using containerization technology. Whether you're new to microservices or looking to expand your knowledge, this book will provide you with the essential tools and techniques to succeed in today's growing software development landscape.

Developers:

- If you're a developer looking to move from traditional monolithic architectures to microservices, this book will provide you with the necessary knowledge and practical skills to design, develop, and deploy microservices-based applications using containerization technology.

- Whether you specialize in a specific programming language or framework, the hands-on tutorials and real-world examples in this book will help you gain a deeper understanding of how to implement microservices using a variety of technologies, including .NET Core, Node.js, React.js, SQL databases, and more.

Architects:

- For architects responsible for designing and planning the architecture of modern applications, this book will serve as a comprehensive guide to understanding the principles, patterns, and best practices of microservices architecture.

- You'll learn how to design scalable, resilient, and maintainable systems using microservices and containerization technology, and how to address common challenges such as service discovery, communication, and data management in distributed environments.

DevOps Engineers:

- If you're a DevOps engineer tasked with managing the deployment, scaling, and monitoring of microservices-based applications, this book will equip you with the skills needed to leverage containerization tools like Docker and orchestration platforms like Kubernetes.

- You'll learn how to automate the deployment process, implement continuous integration and continuous delivery (CI/CD) pipelines, and ensure the reliability and performance of microservices in production environments.

Students and Researchers:

- This book can also be valuable for students and researchers studying software engineering and cloud computing. It provides a practical, hands-on approach to learning about microservices architecture and containerization technology, with real-world examples and case studies to illustrate key concepts.

Key Benefits of Reading This Book:

Building Microservices with Containers: A Practical Guide offers a lot of benefits to readers at various stages of their application development journey in understanding and implementing microservices architecture with containerization technology. Here are some key benefits you can expect from reading this book:

Hands-On Tutorials:

- Benefit from step-by-step tutorials and real-world examples that guide you through the process of building and deploying microservices using Docker containers.

- Gain practical experience by working on hands-on exercises and projects designed to reinforce your learning and enhance your skills.

Diverse Technology Stack:

- Explore a diverse range of technologies and frameworks, including .NET Core, Node.js, React.js, SQL databases, Docker, and Kubernetes.

- Learn how to choose the right tools and technologies for your specific use case, and how to integrate them effectively to build scalable and resilient applications.

Transition from Monolithic to Microservices:

- Understand the benefits and challenges of transitioning from monolithic architectures to microservices, and how to plan and execute a successful migration strategy.

- Learn how to decompose monolithic applications into smaller, loosely-coupled services, and how to leverage containerization to improve scalability, flexibility, and resilience.

Whether you're a developer, architect, DevOps engineer, student, or researcher, Building Microservices with Containers: A Practical Guide offers valuable insights, practical skills, and career advancement opportunities that will empower you to succeed in today's dynamic and fast-paced software development landscape.

Hands-On Labs

Here is the high-level list of labs we will cover in this chapter:

Lab-1: Getting Started with Microservices - In this lab, we'll introduce you to the concept of microservices and explain their importance in modern application development. You'll gain a high-level understanding of microservices architecture and its benefits.

Lab-2: Getting Started with Docker - Here, we'll look into Docker, the modern containerization technology. You'll learn how to install Docker, run your first container, and explore basic Docker commands.

Lab-3: Create your First Containerized Microservice with .NET Core - This lab guides you through creating a microservice using .NET Core and containerizing it with Docker. You'll learn how to write Dockerfiles and build container images for .NET Core microservices.

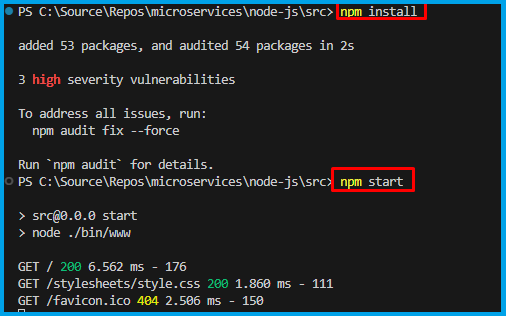

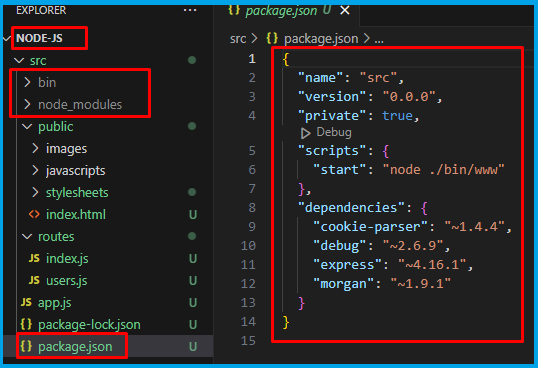

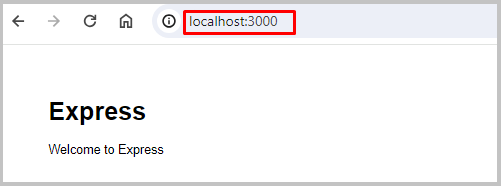

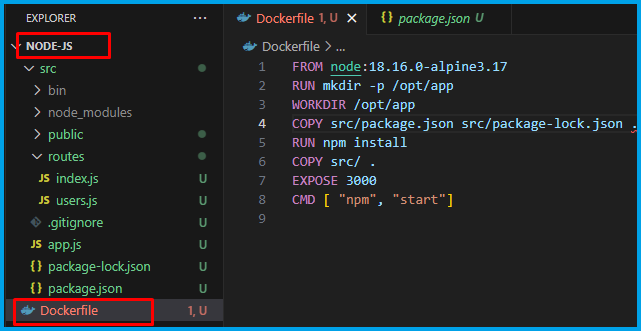

Lab-5: Create your Second Containerized Microservice with Node.js - In this lab, we switch gears to Node.js and create another microservice. You'll containerize a Node.js-based microservice and understand the differences compared to .NET Core.

Lab-6: Create your First Containerized Website using ASP.NET Core MVC - Now, it's time to create a containerized website using ASP.NET Core MVC. You'll build a web application, package it as a Docker image, and run it as a container.

Lab-7: Create your Second Containerized Website using React.js - In this lab, we'll focus on front-end development by creating a React.js-based website. You'll containerize a React application and understand how to work with front-end containers.

Lab-8: Create your First Database with SQL Server - Databases are an essential part of microservices. In this lab, we'll set up a SQL Server database within a container. You'll learn how to create and connect to containerized databases.

Lab-9: Create your Second Database with PostgreSQL - PostgreSQL is another popular database choice. This lab guides you through running PostgreSQL in a Docker container and executing scripts. You'll understand how to work with different database engines within containers.

Lab-10: Running Keycloak application in a Docker Container - External services play an important role in microservices. In this lab, we'll run Keycloak, an identity and access management system, in a Docker container. You'll configure and interact with Keycloak within the containerized environment.

Lab-11: Running Drupal website in a Docker Container - Continuing with external services, we'll set up Drupal, a content management system, in a Docker container. You'll explore how to work with content management systems within containers.

These hands-on labs provide a practical foundation for building and containerizing microservices. By the end of these labs, you'll have hands-on experience with various technologies and a clear understanding of how to create and run microservices and external services in containers. This knowledge will be invaluable as we progress through the chapters and explore more advanced microservices concepts and deployment strategies.

Categories of Labs:

The labs in this chapter are grouped into four categories. These categories provide a structured approach to learning containerization across different aspects of web development, from APIs and websites to databases and external services. By completing the labs in each category, you will gain comprehensive knowledge and skills essential for modern application development practices.

- Creating Containerized APIs (API Development)

- Creating Containerized Websites (Website Development)

- Setting Up Databases in Containers (Database Containers)

- Running External Services in Docker Containers (External Services)

Creating Containerized APIs

In the labs in this category, you'll learn how to create containerized APIs using technologies such as .NET Core Web API and Node.js.

.NET Core Web API:

- Introduction to .NET Core Web API: We'll start by introducing you to .NET Core Web API, a cross-platform framework for building RESTful services.

- Setting Up a .NET Core Web API Project: We'll guide you through setting up a new .NET Core Web API project.

- Containerization with Docker: You'll learn how to package your .NET Core Web API as a Docker container. We'll provide guidance on creating a Dockerfile for your API.

- Running the Containerized .NET Core Web API: You'll see how to run your containerized .NET Core Web API locally and understand how containers simplify deployment.

Node.js APIs:

- Introduction to Node.js: Node.js is a popular JavaScript runtime for building RESTful services. We'll introduce you to Node.js and explain its role in modern REST API development.

- Creating a Node.js REST API: You'll learn how to create a Node.js API from scratch.

- Containerization with Docker: Similar to .NET Core Web API, we'll guide you through containerizing your Node.js API. You'll create a Dockerfile for your RESTful service.

- Running the Containerized Node.js API: You'll see how to run your containerized Node.js API locally.

By the end of these labs, you'll have hands-on experience with .NET Core Web API and Node.js, along with the knowledge of how to containerize APIs. These skills are essential as we move forward to deploy these containerized APIs in later chapters.

Creating Containerized Websites

In the labs in this category, you'll learn how to create containerized websites using technologies such as ASP.NET Core MVC and React.js.

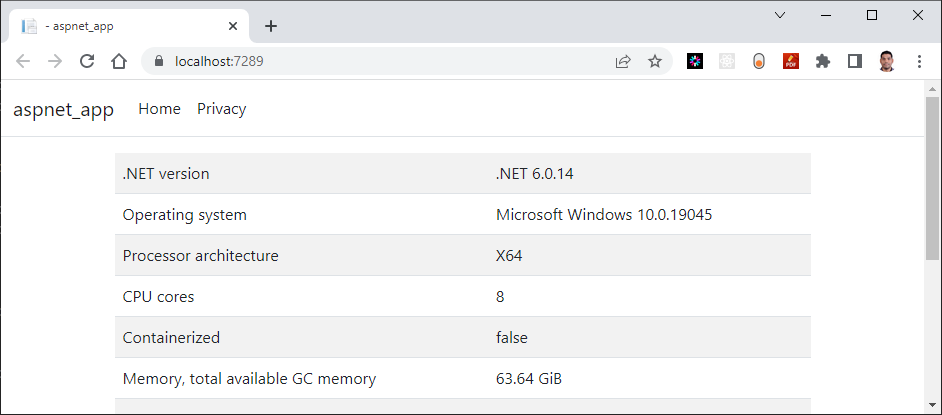

ASP.NET Core MVC:

- Introduction to ASP.NET Core MVC: We'll start by introducing you to ASP.NET Core MVC, a cross-platform framework for building web applications. You'll understand its role in creating dynamic web content.

- Setting Up an ASP.NET Core MVC Project: We'll guide you through setting up a new ASP.NET Core MVC project.

- Containerization with Docker: You'll learn how to package your ASP.NET Core MVC application as a Docker container. We'll provide guidance on creating a Dockerfile for your web application.

- Running the Containerized ASP.NET Core MVC Application: You'll see how to run your containerized ASP.NET Core MVC application locally and understand how containers simplify deployment.

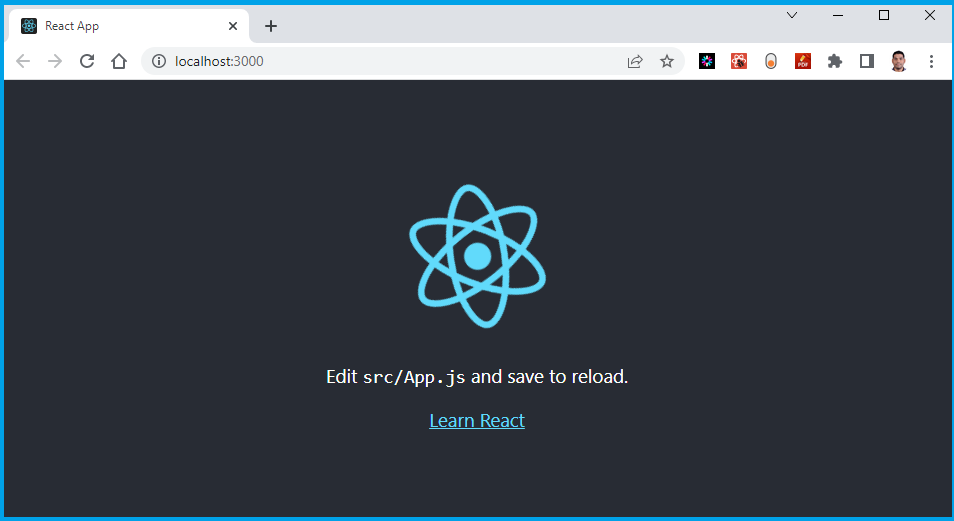

React.js:

- Introduction to React.js: React.js is a popular JavaScript library for building user interfaces. We'll introduce you to React.js and explain its role in modern web development.

- Creating a React.js Application: You'll learn how to create a React.js application from scratch.

- Containerization with Docker: Similar to ASP.NET Core MVC, we'll guide you through containerizing your React.js application. You'll create a Dockerfile for your web app.

- Running the Containerized React.js Application: You'll see how to run your containerized React.js application locally.

By the end of these labs, you'll have hands-on experience with ASP.NET Core MVC and React.js, along with the knowledge of how to containerize web applications. These skills are essential as we move forward to deploy these containerized websites alongside microservices in later chapters.

Setting Up Databases in Containers

In the labs in this category, we'll set up databases within containers for microservices data storage. You'll learn how to create containerized database instances using SQL Server and PostgreSQL.

Microservices often rely on databases to store and manage data. Containerizing databases offers numerous advantages, such as isolation, portability, and versioning. In this section, we'll focus on two popular database systems: SQL Server and PostgreSQL.

SQL Server:

- Introduction to SQL Server: We'll introduce you to SQL Server, a robust relational database management system (RDBMS) developed by Microsoft.

- Containerization with Docker: You'll learn how to containerize SQL Server by pulling an official SQL Server Docker image from the Azure Container Registry or Docker Hub.

- Running SQL Server in a Docker Container: We'll guide you through running a SQL Server container, configuring database settings, and connecting to the containerized SQL Server instance.

- Data Management: You'll explore data management tasks within a containerized SQL Server, such as creating databases and tables and performing CRUD (Create, Read, Update, Delete) operations.

- Connecting to the Database Locally: Finally, you'll explore tools such as SQL Server Management Studio (SSMS) and Azure Data Studio for connecting to the containerized SQL Server database.

PostgreSQL:

- Introduction to PostgreSQL: We'll introduce you to PostgreSQL, a powerful open-source relational database system known for its scalability and extensibility.

- Containerization with Docker: You'll learn how to containerize PostgreSQL by pulling an official PostgreSQL Docker image from Docker Hub.

- Running PostgreSQL in a Docker Container: We'll guide you through running a PostgreSQL container, configuring database settings, and connecting to the containerized PostgreSQL instance.

- Data Management: You'll explore data management tasks within a containerized PostgreSQL database, including creating databases and tables and executing SQL queries.

- Connecting to the Database Locally: Finally, you'll explore tools such as psql and pgAdmin 4 for connecting to the containerized PostgreSQL database.

By the end of these labs, you'll have hands-on experience with containerized SQL Server and PostgreSQL databases, understanding their role in microservices data storage. These skills are important as you proceed through the chapters, where microservices will interact with these containerized databases to retrieve and store data.

Running External Services in Containers

In the labs in this category, we'll integrate external services into your microservices architecture. You'll learn how to run external services like Keycloak and Drupal in Docker containers, enhancing the capabilities of your microservices.

External services play an important role in microservices architecture, providing essential functionalities such as authentication and content management. Containerizing these external services offers several advantages, including consistency and simplified deployment. In this section, we'll focus on two prominent external services: Keycloak and Drupal.

Keycloak:

-

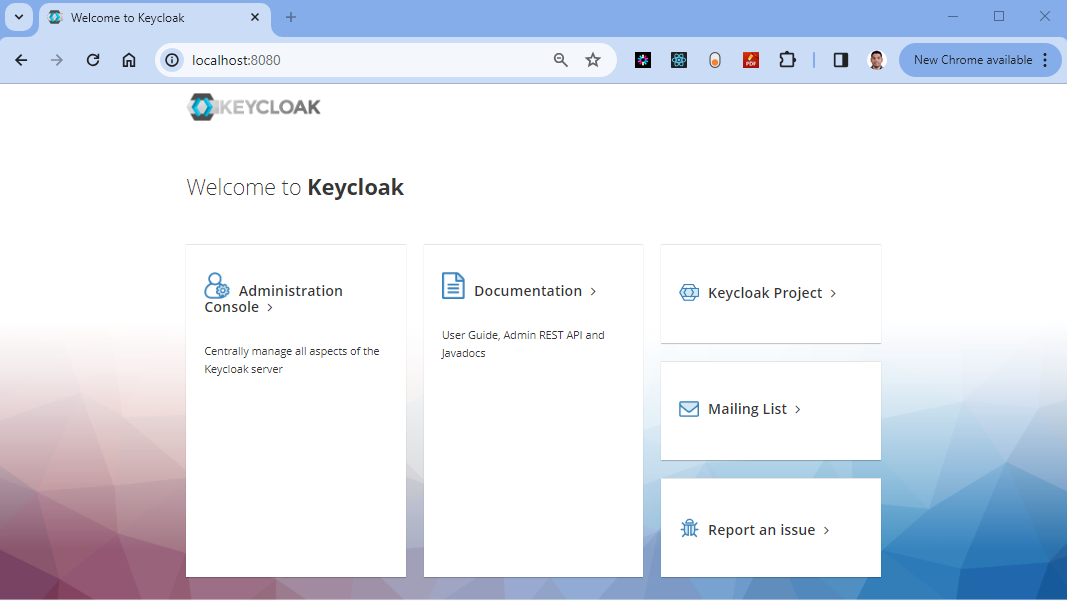

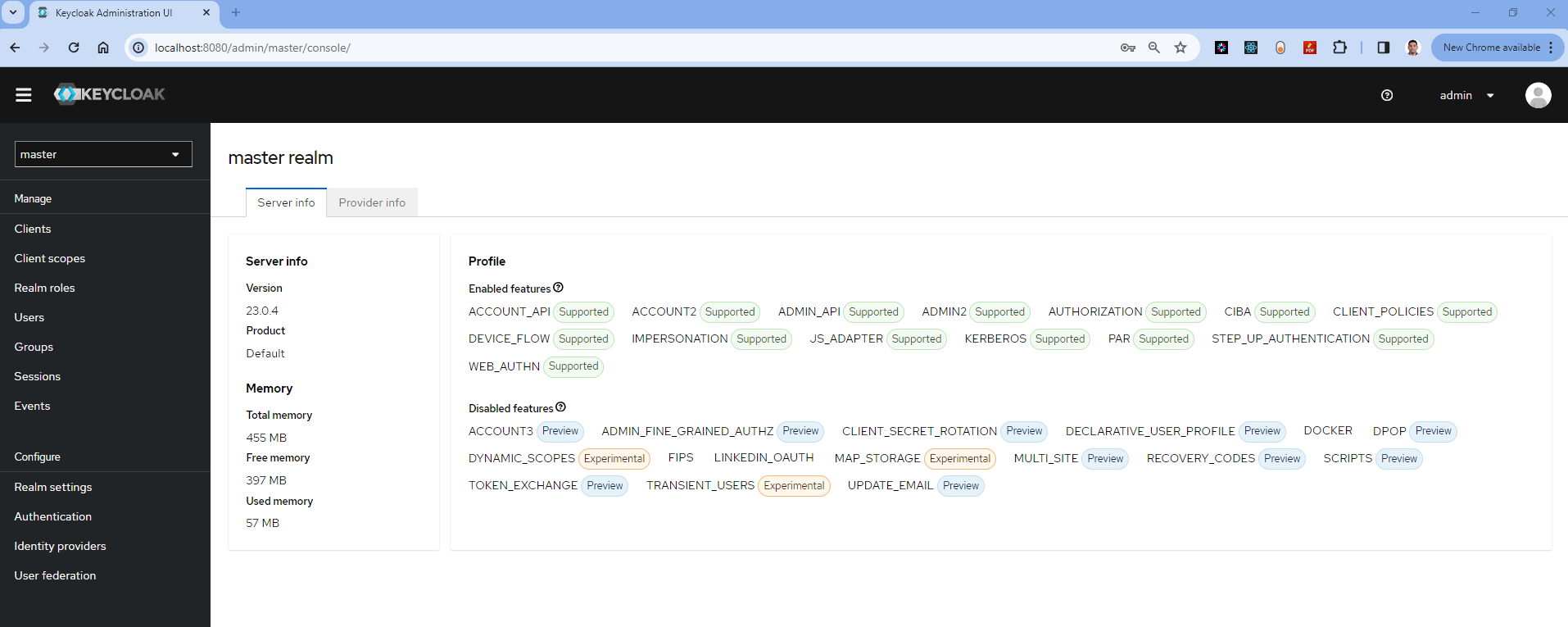

Introduction to Keycloak: Keycloak is an open-source identity and access management system. We'll introduce you to Keycloak and explain its significance in microservices authentication.

-

Containerization with Docker: You'll learn how to containerize Keycloak by pulling an official Keycloak Docker image from the Docker Hub.

-

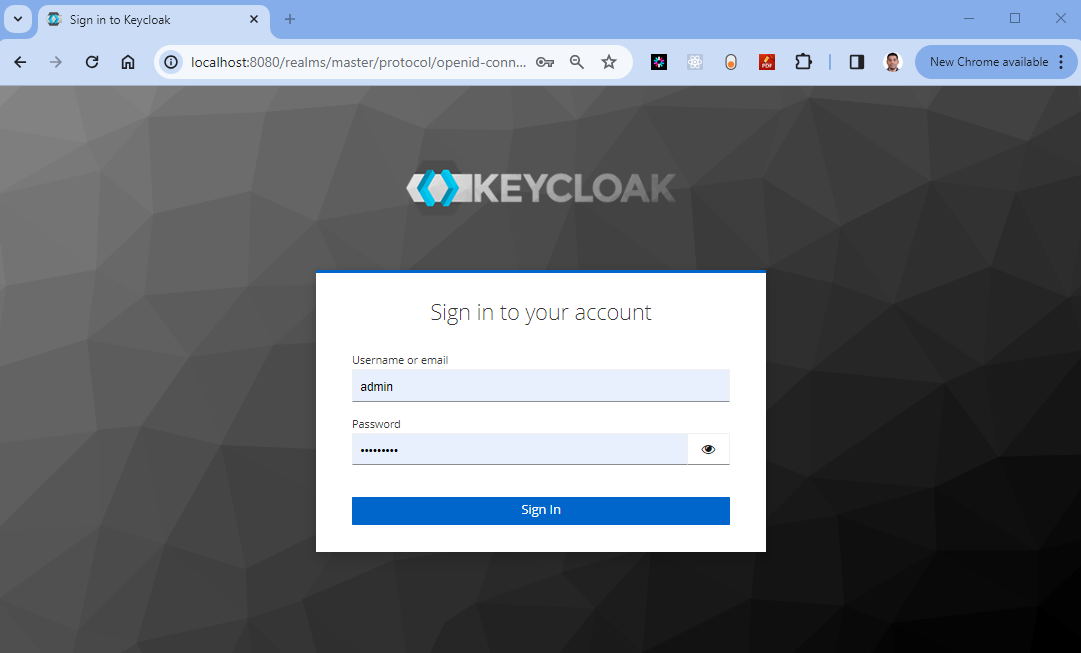

Running Keycloak in a Docker Container: We'll guide you through running a Keycloak container, configuring realms, users, and roles within the containerized Keycloak instance.

-

Testing the Keycloak Application Locally: Finally you'll see how to browse your containerized Keycloak application locally and login into admin portal and intacting with Keycloak application.

Drupal:

-

Introduction to Drupal: Drupal is a popular open-source content management system (CMS). We'll introduce you to Drupal and its role in managing content for microservices.

-

Containerization with Docker: You'll learn how to containerize Drupal by pulling an official Drupal Docker image from the Docker Hub.

-

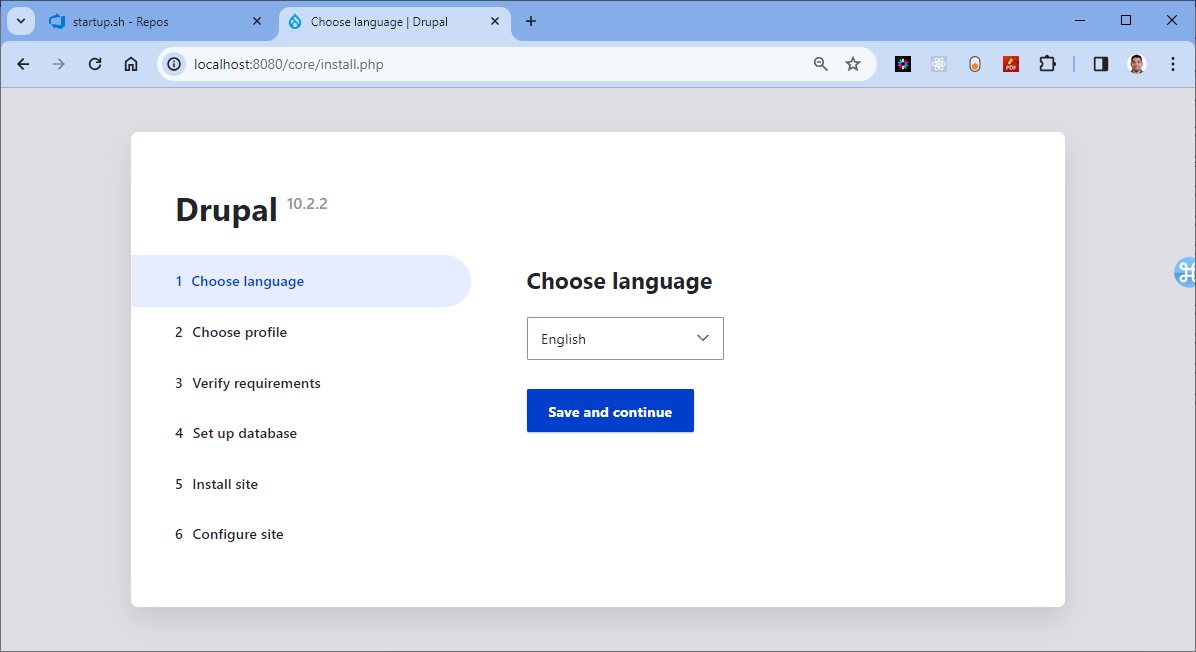

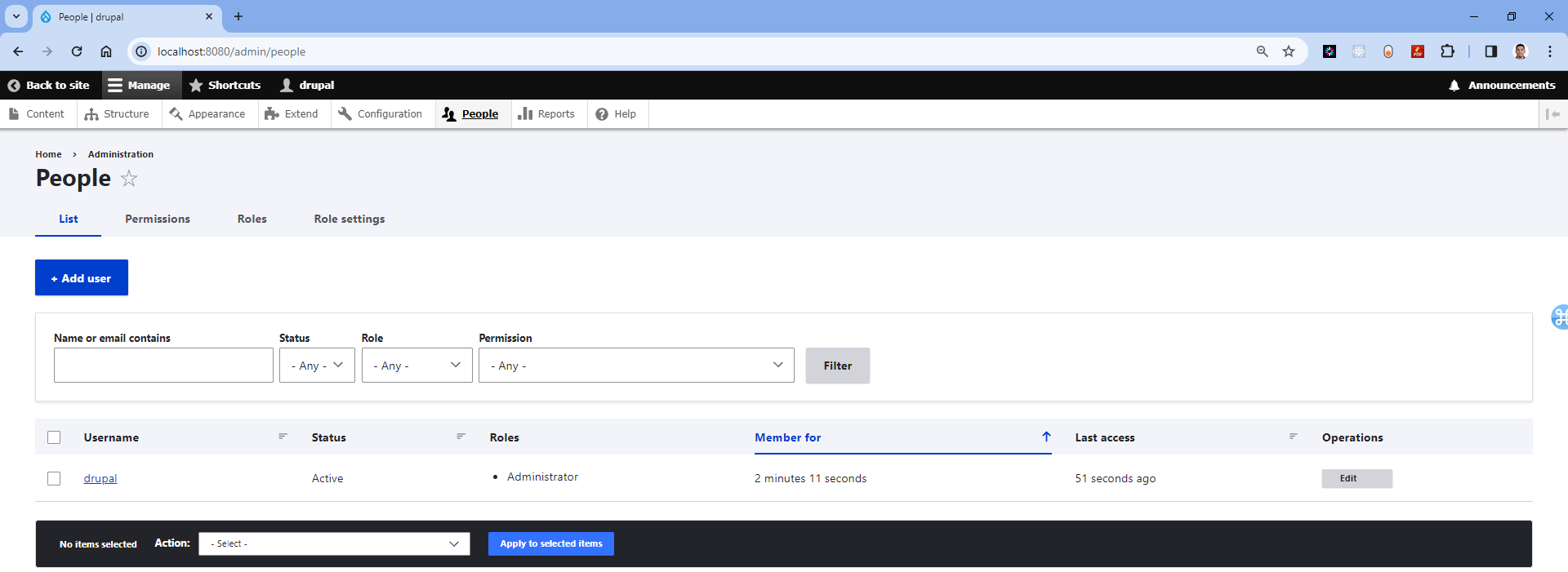

Running Drupal in a Docker Container: We'll guide you through running a Drupal container, setting up a website, and managing content within the containerized Drupal instance.

-

Testing the Drupal Website Locally: Finally you'll see how to browse your containerized Drupal Website locally and login into drupal portal and intacting with drupal website.

By the end of these labs, you'll have hands-on experience with containerized Keycloak and Drupal instances, understanding how to integrate them seamlessly into your microservices ecosystem. These skills are essential as you proceed through the chapters, where microservices will rely on these external services for authentication, authorization, and content management.

Microservices

Chapter-1: Getting Started with Microservices

Overview

Welcome to the first chapter of our book. In this chapter, we will begin our journey by understanding microservices architectures and how they differ from traditional monolithic architectures. We'll also learn the advantages of microservices architectures, including scalability, flexibility, and easier maintenance. We will then look at the challenges of microservices and the considerations that need to be carefully addressed, along with key technologies and communication patterns. Finally, we will perform four tasks: identifying the microservices for the book, identifying the Git repositories needed, and creating an organization and a project in Azure DevOps, so that we can continue our journey in this book.

Objective

In this chapter, our objective is to learn the following topics and complete the following tasks:

- What are Microservices?

- Microservices vs Monolithic Architectures

- Advantages of Microservices

- Challenges and Considerations

- Key Technologies and Tools

- Microservices Communication

- Domain-Driven Design (DDD)

- Task-1: Identify Microservices for the book

- Task-2: Identify the List of Git Repositories Needed

- Task-3: Create new Azure DevOps Organization

- Task-4: Create new Azure DevOps Project

What are Microservices?

Microservices are an architectural style that structures an application as a collection of small, independent, and loosely coupled services. These services, known as microservices, are designed to be self-contained and focused on specific functions or features of the application. Unlike monolithic applications, where all components are tightly integrated into a single codebase, microservices allow for the decomposition of an application into smaller, manageable parts.

Key Characteristics:

- Autonomous and independently deployable

- Organized around business capabilities

- Technology-agnostic (can be built using different languages/platforms)

- Decentralized data management

- Fault isolation
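To make these characteristics concrete, here is a minimal Python sketch. It is an illustration only: the `CatalogService` and `OrderService` names, the product ID, and the price are hypothetical, and in a real system each service would run as its own process and be called over the network rather than through a direct method call. What the sketch does show is that each service owns its data and that the order service depends only on the catalog's public interface.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogService:
    """Owns product data; no other service reads this store directly."""
    _products: dict = field(default_factory=lambda: {"book-1": 25.0})

    def get_price(self, product_id: str) -> float:
        # Public interface: the only way other services see catalog data.
        return self._products[product_id]


@dataclass
class OrderService:
    """Owns order data and depends only on the catalog's public method."""
    catalog: CatalogService
    _orders: list = field(default_factory=list)

    def place_order(self, product_id: str, quantity: int) -> float:
        total = self.catalog.get_price(product_id) * quantity
        self._orders.append((product_id, quantity, total))  # stored locally
        return total


orders = OrderService(catalog=CatalogService())
print(orders.place_order("book-1", 2))
```

Because `OrderService` never touches the catalog's internal store, the catalog could change how it stores products without any change to the order service.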

Microservices vs Monolithic Architectures

Monolithic Architectures:

- In a monolithic architecture, the entire application is built as a single, unified codebase.

- All components of the application, including user interfaces, business logic, and data access layers, are tightly coupled.

- Scaling a monolithic application typically involves replicating the entire application, even if only specific parts require additional resources.

- Maintenance and updates often require making changes to the entire codebase, making it challenging to isolate and fix issues.

Microservices:

- Microservices architecture promotes breaking down the application into smaller, independent services.

- Each microservice is responsible for a specific piece of the application's functionality.

- Microservices can be developed, deployed, and scaled independently.

- Updates and maintenance are easier to manage, as changes to one microservice do not impact the entire system.
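The scaling difference between the two styles can be shown with a little arithmetic. The Python sketch below uses made-up component names and memory figures, purely as assumptions for illustration. It compares the memory needed when a monolith is replicated as a whole with the memory needed when only the busy service is replicated.

```python
# Hypothetical per-instance memory footprints in MB (illustrative numbers only).
COMPONENT_MEMORY_MB = {"catalog": 300, "orders": 200, "reports": 500}


def monolith_memory(replicas: int) -> int:
    """Every replica of a monolith carries all components."""
    return replicas * sum(COMPONENT_MEMORY_MB.values())


def microservices_memory(replicas_per_service: dict) -> int:
    """Each service is replicated only as often as its own load requires."""
    return sum(
        COMPONENT_MEMORY_MB[name] * count
        for name, count in replicas_per_service.items()
    )


# Suppose only "orders" needs four instances to handle the load.
print(monolith_memory(4))  # 4000
print(microservices_memory({"catalog": 1, "orders": 4, "reports": 1}))  # 1600
```

With these assumed numbers, scaling the monolith four times costs 4000 MB, while scaling only the `orders` service costs 1600 MB. The exact saving depends entirely on the real footprint of each component.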

Advantages of Microservices

Microservices architecture offers several advantages, including:

- Scalability: Microservices can be easily scaled horizontally to handle increased traffic, ensuring that the system remains responsive during high-demand periods.

- Flexibility: Developers can work on individual microservices without affecting the entire application. This makes it easier to introduce new features, fix bugs, or update a specific service without disrupting the entire system.

- Easy Maintenance: Smaller, self-contained services are typically easier to maintain and manage. Updates and changes can be isolated to specific microservices, reducing the risk of unintended consequences.

- Improved Fault Isolation: When a microservice fails, it usually doesn't bring down the entire system. Failures are contained within the affected service, minimizing the impact on the overall application.

- Technology Agnosticism: Microservices allow you to use different technologies and programming languages for different services, which can be chosen based on the specific requirements of each service.

- Rapid Development: Smaller teams can work independently on microservices, enabling faster development cycles and quicker time-to-market for new features or products.

- Enhanced Testing: Isolated microservices can be tested more thoroughly, leading to better quality assurance and reduced testing complexity compared to monolithic applications.

- Easier Deployment: Smaller, independent services are easier to deploy, reducing the risk of deployment failures and making it possible to implement continuous integration and continuous delivery (CI/CD) practices.
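Fault isolation from the list above can be sketched in a few lines of Python. This is a simplified illustration, not a production pattern: `fetch_recommendations` is a hypothetical stand-in for a call to a separate service that happens to be down, and the page contents are invented. The point is that the caller treats the failing dependency as optional and degrades gracefully instead of failing as a whole.

```python
def fetch_recommendations(user_id: str) -> list:
    """Hypothetical downstream call that is currently failing."""
    raise ConnectionError("recommendation service unavailable")


def render_home_page(user_id: str) -> dict:
    """Build the page; a failed optional dependency degrades, it does not crash."""
    page = {"user": user_id, "catalog": ["book-1", "book-2"]}
    try:
        page["recommendations"] = fetch_recommendations(user_id)
    except ConnectionError:
        page["recommendations"] = []  # fall back to an empty section
    return page


print(render_home_page("user-42"))
```

The catalog section is still returned even though the recommendation call failed, which is the behavior fault isolation aims for.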

Challenges and Considerations

While microservices offer numerous advantages, they also come with their own set of challenges and considerations. Careful planning and sound architectural decisions are important for realizing the benefits of microservices while mitigating these challenges.

Challenges of microservices

- Complexity: Microservices introduce complexity, as an application is divided into multiple services, each with its own codebase, data store, and dependencies. Managing the interactions between microservices and ensuring the overall system's integrity can be challenging.

- Data Consistency: Maintaining data consistency in a distributed microservices architecture can be complex. With each microservice managing its data, ensuring data synchronization and integrity across services is important.

- Distributed Systems Issues: Microservices are inherently distributed, which introduces challenges such as network latency, message serialization, and handling communication failures. Implementing robust error handling and resilience mechanisms becomes essential.

- Operational Complexity: Managing and monitoring a large number of microservices in a production environment can be operationally complex. Tools and practices for deployment, monitoring, and scaling need to be in place to ensure smooth operations.
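One common resilience mechanism for the communication failures mentioned above is retrying with exponential backoff. The Python sketch below is a minimal illustration under stated assumptions: the `call_with_retry` helper, its default values, and the `flaky_service` function are all hypothetical, and real systems usually add jitter, timeouts, and limits on which errors are retried.

```python
import time


def call_with_retry(operation, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(); on ConnectionError, wait and retry with a doubling delay."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            sleep(base_delay * (2 ** attempt))


# Hypothetical service call that fails twice before succeeding.
calls = {"count": 0}


def flaky_service():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network failure")
    return "ok"


print(call_with_retry(flaky_service))
```

The `sleep` parameter is injectable so that the waiting behavior can be replaced in tests without slowing them down.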

Considerations for Microservices Adoption

- Application Complexity: Microservices are well-suited for complex, large-scale applications with multiple modules or functionalities. For simpler applications, a monolithic architecture may be more appropriate.

- Team Structure: Consider your organization's team structure. Microservices often align with small, cross-functional teams that can own and manage individual microservices. Ensure your teams have the necessary skills for microservices development and operations.

- Scalability and Performance: Microservices can provide scalability benefits, particularly for applications with varying workloads. Evaluate whether your application requires the ability to scale individual components independently.

- Frequent Updates: If your application requires frequent updates and releases, microservices can support continuous integration and deployment practices. Ensure you have the necessary CI/CD pipelines and infrastructure.

Choosing the Right Architecture

The choice between monolithic and microservices architecture depends on various factors, including the complexity of the application, team structure, scalability requirements, and development speed. Monolithic architectures excel in simplicity and are suitable for smaller applications with straightforward requirements. Microservices, on the other hand, offer flexibility and scalability for larger, more complex applications but introduce operational complexities.

Key Technologies and Tools

Microservices development relies on a set of essential technologies and tools that facilitate the creation, deployment, and management of individual microservices.

- Docker: Docker is a containerization platform that allows developers to package applications and their dependencies into lightweight containers. Docker containers provide consistency in deployment across different environments, ensuring that microservices run reliably on any system.

- DevContainers: DevContainers streamline the development and testing of microservices locally by providing a controlled, isolated, and consistent environment that enhances collaboration among team members and simplifies the management of complex microservices ecosystems.

- Kubernetes: Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications, including microservices. Kubernetes simplifies the management of microservices at scale, enabling features like load balancing, auto-scaling, and rolling updates.

- API Gateways: API gateways act as a front-end for microservices, providing a unified entry point for clients and handling tasks such as authentication, rate limiting, and request routing. API gateways simplify client interactions with microservices, centralize security controls, and enable API versioning and documentation.

- Continuous Integration/Continuous Deployment (CI/CD) Tools: CI/CD tools such as Azure DevOps and Argo CD, together with Helm charts for packaging deployments, automate the building, testing, and deployment of microservices, supporting rapid development and delivery. CI/CD pipelines streamline the development process, allowing for frequent updates and reducing the risk of errors.

- Monitoring and Observability Tools (e.g., Prometheus, Grafana, Jaeger): Monitoring and observability tools provide insights into the performance, availability, and behavior of microservices, helping to detect and troubleshoot issues. These tools support the reliability of microservices in production by offering real-time monitoring, logging, and tracing capabilities.
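The API gateway role described above (a single entry point that authenticates requests and routes them to the right service) can be sketched in a few lines. This is only an illustration under stated assumptions: the route table, the service host names, and the functions `resolve_upstream` and `handle_request` are all hypothetical, and a real gateway would validate the token and proxy the request instead of returning a target URL.

```python
# Hypothetical route table: path prefix -> internal service base URL.
ROUTES = {
    "/api/products": "http://product-service:8080",
    "/api/orders": "http://order-service:8080",
    "/api/customers": "http://customer-service:8080",
}


def resolve_upstream(path, routes=ROUTES):
    """Return the upstream URL for the longest matching route prefix, or None."""
    matches = [p for p in routes if path == p or path.startswith(p + "/")]
    if not matches:
        return None
    prefix = max(matches, key=len)
    return routes[prefix] + path


def handle_request(path, headers, routes=ROUTES):
    """Return (status_code, upstream_url) for an incoming request."""
    # Centralized security check: reject requests without credentials.
    if "Authorization" not in headers:
        return 401, None
    upstream = resolve_upstream(path, routes)
    if upstream is None:
        return 404, None
    # A real gateway would forward the request to `upstream` at this point.
    return 200, upstream


print(handle_request("/api/orders/42", {"Authorization": "Bearer demo-token"}))
print(handle_request("/api/orders/42", {}))
```

Keeping the routing table in one place is what lets clients stay unaware of how many services sit behind the gateway.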

Microservices Communication

Microservices can communicate with each other using different communication patterns, both synchronous and asynchronous.

Synchronous:

- HTTP/HTTPS: Microservices can communicate over standard HTTP/HTTPS protocols, making it easy to create RESTful APIs or web services. Synchronous communication is suitable for scenarios where immediate responses are required.

- gRPC: gRPC is a high-performance, language-agnostic remote procedure call (RPC) framework that allows microservices to communicate efficiently. It is ideal for scenarios where low-latency, binary-encoded communication is needed.

Asynchronous:

- Message Queues (e.g., RabbitMQ, Apache Kafka): Microservices can exchange messages through message queues or publish-subscribe systems. Asynchronous communication is useful for decoupling services and handling background tasks or event-driven scenarios.

- Event Sourcing and Event-driven Architecture: In event-driven architecture, microservices publish and consume events to communicate changes or trigger actions. This pattern is beneficial for building scalable, loosely coupled systems that respond to real-time events.
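The difference between the asynchronous patterns above and a direct HTTP call is that the publisher does not wait for the consumers. The following in-memory sketch illustrates that idea only; it is not how RabbitMQ or Kafka work internally, and the class `InMemoryEventBus` and the topic name `order.placed` are assumptions made for this example.

```python
from collections import defaultdict, deque


class InMemoryEventBus:
    """Toy publish-subscribe bus: publishing queues an event, delivery happens later."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of handler callables
        self._pending = deque()                # events waiting to be delivered

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, payload):
        # The publisher returns immediately; it does not know who will consume.
        self._pending.append((topic, payload))

    def drain(self):
        """Deliver all queued events and return the number of handler calls."""
        delivered = 0
        while self._pending:
            topic, payload = self._pending.popleft()
            for handler in self._subscribers[topic]:
                handler(payload)
                delivered += 1
        return delivered


bus = InMemoryEventBus()
emails, stock_updates = [], []
bus.subscribe("order.placed", lambda event: emails.append(event["order_id"]))
bus.subscribe("order.placed", lambda event: stock_updates.append(event["sku"]))

bus.publish("order.placed", {"order_id": "o-1", "sku": "book"})
print(emails, stock_updates)   # nothing delivered yet
bus.drain()
print(emails, stock_updates)   # both subscribers have now handled the event
```

Adding a third consumer requires no change to the publisher, which is the decoupling benefit the text describes.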

Domain-Driven Design (DDD)

Domain-Driven Design (DDD) is a set of principles, patterns, and techniques for designing applications with a focus on the domain of the problem being solved. In the context of microservices architecture, DDD plays an important role in helping you define the boundaries of your microservices and ensure that they align with your business domain. Here's how DDD techniques can be applied in microservices architecture:

- Bounded Contexts: In DDD, a bounded context is a specific boundary within which a domain model is defined and applicable. In microservices, each microservice typically corresponds to a bounded context. Bounded contexts ensure that each microservice has a well-defined scope and encapsulates a specific aspect of the business domain.

- Aggregates: Aggregates in DDD represent a cluster of domain objects treated as a single unit, with one root entity that controls all changes to the cluster. In microservices, a service typically owns one or more aggregates and provides APIs for manipulating them. This helps maintain data consistency and isolation.

- Entities and Value Objects: DDD distinguishes between entities (objects with a distinct identity that persists over time) and value objects (objects with no distinct identity, defined only by their attributes). In microservices, entities and value objects are used to model domain concepts within the microservice's scope, helping to define data structures and behavior.

- Context Mapping: Context mapping in DDD deals with defining relationships and interactions between bounded contexts. It helps manage the integration points between different parts of the system. In microservices architecture, context mapping is essential for specifying how microservices interact and communicate with each other, either through APIs or messaging.
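The distinction between value objects, entities, and aggregates is easier to see in code. The sketch below is one possible way to model them; the classes `Money`, `OrderLine`, and `Order` are hypothetical examples for an order domain and are not taken from the applications built later in this book.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Money:
    """Value object: immutable and compared by its attributes."""
    amount_cents: int
    currency: str


@dataclass(frozen=True)
class OrderLine:
    """Value object that lives inside the Order aggregate."""
    sku: str
    quantity: int
    unit_price: Money


@dataclass(eq=False)
class Order:
    """Entity and aggregate root: compared by identity (order_id), not by contents."""
    order_id: str
    currency: str = "USD"
    lines: list = field(default_factory=list)

    def __eq__(self, other):
        return isinstance(other, Order) and self.order_id == other.order_id

    def __hash__(self):
        return hash(self.order_id)

    def add_line(self, sku, quantity, unit_price):
        # All changes go through the root so that invariants are enforced in one place.
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        if unit_price.currency != self.currency:
            raise ValueError("currency mismatch")
        self.lines.append(OrderLine(sku, quantity, unit_price))

    def total(self):
        cents = sum(line.quantity * line.unit_price.amount_cents for line in self.lines)
        return Money(cents, self.currency)


order = Order("o-1")
order.add_line("book", 2, Money(1500, "USD"))
order.add_line("pen", 1, Money(250, "USD"))
print(order.total())
print(Money(500, "USD") == Money(500, "USD"))  # value objects: equal by value
print(Order("o-1") == order)                   # entities: equal by identity
```

Routing every change through `Order.add_line` is what lets the aggregate protect its own consistency rules.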

Domain-Driven Design (DDD) Example

Let’s take a detailed real-world example of a microservices-based application following Domain-Driven Design (DDD) principles. This example will help you understand how to identify bounded contexts, define microservices, map databases per service, and see how this all ties together into a cohesive system.

Scenario: Online Retail Platform (E-Commerce System)

You are building a modern online retail platform similar to Amazon or Flipkart. The platform needs to handle:

- Product management

- Customer management

- Orders and payments

- Inventory tracking

- Notifications

- Authentication and authorization

Applying DDD: Identify Bounded Contexts

In DDD, each bounded context becomes a candidate for a microservice. Here’s how the domain breaks down:

| Bounded Context | Description |
|---|---|
| Product Catalog | Manages product details, categories, pricing |
| Customer Management | Handles user registration, profile, and address |
| Order Management | Creates and tracks customer orders |
| Inventory | Manages stock levels per product and location |
| Payment | Handles payment processing, refunds |
| Notification | Sends emails/SMS for order updates |
| Authentication | User login, token issuance, role-based access |

Microservices List with Databases

| Microservice | Responsibility | Database | Technology Stack |
|---|---|---|---|
| ProductService | Add/update product catalog, categories, pricing | SQL Server / PostgreSQL | .NET Core Web API |
| CustomerService | Manage user profile, address, contact info | PostgreSQL | .NET Core Web API |
| OrderService | Place order, track order status, order history | SQL Server | .NET Core Web API |
| InventoryService | Check and update stock, warehouse mapping | MongoDB / PostgreSQL | Node.js / .NET |
| PaymentService | Handle payments, payment status, refunds | PostgreSQL | Node.js |
| NotificationService | Send order/shipping alerts via email/SMS | No DB / Redis Queue | Node.js |
| AuthService | Register/login users, token issuance, RBAC | PostgreSQL (Keycloak DB) | Keycloak (Docker) |

Each microservice:

- Has its own isolated data store

- Manages its own business rules

- Communicates with others via REST APIs or message queues

Microservice Interactions (Example Flow)

User places an order:

- AuthService validates user token.

- OrderService creates order, validates customer and product.

- ProductService provides product pricing.

- InventoryService checks and reserves stock.

- PaymentService processes payment.

- NotificationService sends confirmation email/SMS.

- OrderService updates order status.

These services can be chained using REST (synchronous) or Kafka/RabbitMQ (asynchronous).
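The flow above can be sketched as a simple synchronous orchestration. Everything here is a stand-in: `place_order` and `make_stub_services` are hypothetical, each service is replaced by a plain function, and the step that releases reserved stock after a failed payment is an assumption added for illustration (the flow in the text does not describe failure handling).

```python
def place_order(token, customer_id, sku, quantity, services):
    """Run the order flow; `services` maps step names to stub callables."""
    if not services["auth"](token):                      # AuthService
        return {"status": "rejected", "reason": "invalid token"}
    unit_price = services["pricing"](sku)                # ProductService
    if not services["reserve_stock"](sku, quantity):     # InventoryService
        return {"status": "rejected", "reason": "out of stock"}
    total = unit_price * quantity
    if not services["pay"](customer_id, total):          # PaymentService
        # Assumed compensating action: give the reserved stock back.
        services["release_stock"](sku, quantity)
        return {"status": "payment_failed", "total": total}
    services["notify"](customer_id, f"Order confirmed: {quantity} x {sku}")  # NotificationService
    return {"status": "confirmed", "total": total}       # OrderService updates status


def make_stub_services(stock, prices_cents, payment_ok=True):
    """Build in-memory stand-ins for the remote services."""
    sent = []

    def reserve(sku, qty):
        if stock.get(sku, 0) < qty:
            return False
        stock[sku] -= qty
        return True

    def release(sku, qty):
        stock[sku] = stock.get(sku, 0) + qty

    services = {
        "auth": lambda token: token == "valid-token",
        "pricing": lambda sku: prices_cents[sku],
        "reserve_stock": reserve,
        "release_stock": release,
        "pay": lambda customer_id, total: payment_ok,
        "notify": lambda customer_id, message: sent.append((customer_id, message)),
    }
    return services, sent


stock = {"book": 5}
services, sent = make_stub_services(stock, {"book": 1500})
print(place_order("valid-token", "c-1", "book", 2, services))
print(stock, sent)
```

In an asynchronous variant, each step would publish an event instead of calling the next service directly, but the sequence of responsibilities stays the same.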

Databases per Microservice (DB-per-Service Pattern)

Each microservice owns its own database schema and is responsible for reading/writing to it.

| Microservice | Database Type | Reason |
|---|---|---|
| ProductService | PostgreSQL | Relational data, joins for categories |
| CustomerService | PostgreSQL | Structured customer records |
| OrderService | SQL Server | Order lifecycle with transactional guarantees |
| InventoryService | MongoDB | High write-read throughput, document model |
| PaymentService | PostgreSQL | Transactional ACID compliance |
| NotificationService | Redis (Optional) | Queueing and retry tracking |
| AuthService | PostgreSQL | Used internally by Keycloak |

Task-1: Identify Microservices for the book

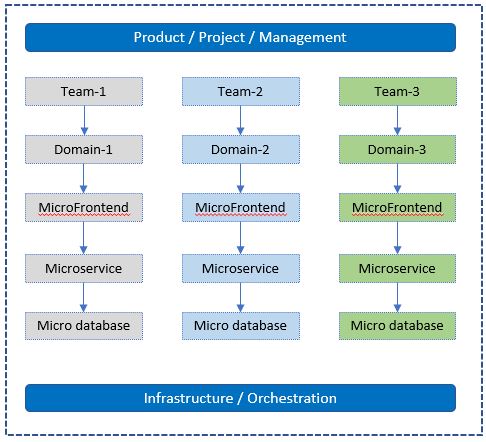

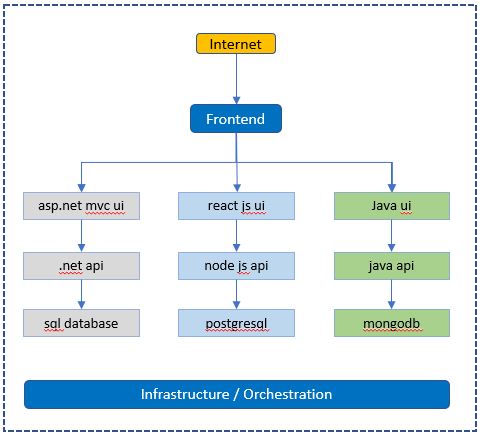

To fully explore the microservices architecture in this book, we will create several containerized microservices and MicroFrontend applications, along with a couple of databases. These applications will allow us to demonstrate real-world scenarios and provide a practical understanding of microservices implementation. In this case study, we will create the following microservices, which will be developed in the upcoming labs. We have purposely selected diverse technologies to ensure a broader learning experience.

| Microservice/Website/Database | Technology Used | Name |
|---|---|---|
| First Microservice | .NET Core Web API (C#) | aspnet-api |
| Second Microservice | Node.js (Node) | nodejs-api |
| First Website | ASP.NET Core MVC (C#) | aspnet-app |
| Second Website | React.js (Node) | react-app |
| First Database | SQL Server | sqlserver-db |
| Second Database | PostgreSQL | postgresql-db |
| Keycloak | Identity and Access Management | keycloak-service |
| Drupal | Content Management System | drupal-service |

For example, here is what the folder structure of our microservices and MicroFrontend applications looks like:

Microservices/
├── aspnet-api/
│   ├── Controllers/
│   ├── Models/
│   ├── appsettings.json
│   ├── Program.cs
│   ├── Startup.cs
│   ├── Dockerfile
│   └── aspnet-api.csproj
└── nodejs-api/
    ├── routes/
    ├── models/
    ├── package.json
    ├── app.js
    └── Dockerfile

Websites/
├── aspnet-app/
│   ├── Controllers/
│   ├── Models/
│   ├── Views/
│   ├── appsettings.json
│   ├── Program.cs
│   ├── Startup.cs
│   ├── Dockerfile
│   └── aspnet-app.csproj
├── react-app/
│   ├── src/
│   ├── package.json
│   ├── public/
│   ├── Dockerfile
│   ├── node_modules/
│   └── README.md
└── ...

Databases/
├── sqlserver-db/
│   ├── tables/
│   ├── procedures/
│   ├── views/
│   ├── functions/
│   ├── triggers/
│   └── Dockerfile
└── postgresql-db/
    ├── tables/
    ├── procedures/
    ├── views/
    ├── functions/
    ├── triggers/
    └── Dockerfile

Important

As you may have noticed, each project has its own Dockerfile, indicating that all these applications will be containerized and ready for deployment to a Kubernetes cluster.
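If you want to check the "one Dockerfile per project" convention automatically, a small script can walk the folder structure and report any project that lacks one. This is only a sketch: the function names `projects_missing_dockerfile` and `build_sample_tree` are hypothetical, and the sample tree is created in a temporary directory purely so the example is self-contained.

```python
import tempfile
from pathlib import Path


def projects_missing_dockerfile(root):
    """Return 'Group/project' names of project folders that have no Dockerfile."""
    missing = []
    for group in sorted(Path(root).iterdir()):        # e.g. Microservices/, Websites/
        if not group.is_dir():
            continue
        for project in sorted(group.iterdir()):       # e.g. aspnet-api/
            if project.is_dir() and not (project / "Dockerfile").is_file():
                missing.append(f"{group.name}/{project.name}")
    return missing


def build_sample_tree(root):
    """Create a small sample layout; react-app deliberately has no Dockerfile."""
    for rel in ["Microservices/aspnet-api", "Websites/react-app", "Databases/sqlserver-db"]:
        (Path(root) / rel).mkdir(parents=True)
    (Path(root) / "Microservices/aspnet-api/Dockerfile").write_text("")
    (Path(root) / "Databases/sqlserver-db/Dockerfile").write_text("")


with tempfile.TemporaryDirectory() as tmp:
    build_sample_tree(tmp)
    print(projects_missing_dockerfile(tmp))
```

A check like this could run in a CI pipeline so that a new project without a Dockerfile is caught before deployment.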

The following diagram shows the conceptual view of the microservices environment


Task-2: Identify the List of Git Repositories Needed

Once you have determined the list of domains or microservices required for your project, it's time to analyze how they will be organized within the source control system, such as Git repositories. One important consideration is determining the number of Git repositories you need.

There are multiple ways to organize source code and pipelines in Azure DevOps Git, and the approach you choose depends on how you want to manage your source code and pipelines for your microservices architecture while ensuring ease of maintenance in the future.

My recommendation is to create a separate Git repository for each domain or microservice. Within each domain, you may have multiple microservices, MicroFrontends, and databases.

For example, let's visualize how the Git structure may look:

- Organization1 (Name of your organization)
  - Project1 (Name of the project)
    - Repo-1 (for Domain1)
      - APIs - Create one or more APIs with separate folders
      - Websites - Create one or more websites with separate folders
      - Databases - Create one or more databases with separate folders
    - Repo-2 (for Domain2)
      - APIs - Create one or more APIs with separate folders
      - Websites - Create one or more websites with separate folders
      - Databases - Create one or more databases with separate folders
    - Repo-3 (for Domain3)
      - APIs - Create one or more APIs with separate folders
      - Websites - Create one or more websites with separate folders
      - Databases - Create one or more databases with separate folders
  - Project2 (Name of another project)
    - Repo-1 (Name of the repository under Project2)
      - APIs - Create one or more APIs with separate folders
      - Websites - Create one or more websites with separate folders
      - Databases - Create one or more databases with separate folders

Repeat this structure as the organization grows and new projects or domains are introduced.

By following this approach, each domain or microservice will have its dedicated Git repository, providing a clear separation and organization of the source code and related artifacts. This structure facilitates easier maintenance, collaboration, and version control.

Remember, this is just a sample structure, and you can adapt it based on your organization's specific needs and preferences.

Visual representation of a sample DevOps Git structure:

Organization1
├── Project1
│   ├── Repo-1 (Microservice-1)
│   │   ├── APIs
│   │   ├── Websites
│   │   └── Databases
│   ├── Repo-2 (Microservice-2)
│   │   ├── APIs
│   │   ├── Websites
│   │   └── Databases
│   └── Repo-3 (Microservice-3)
│       ├── APIs
│       ├── Websites
│       └── Databases
└── Project2
    └── Repo-1
        ├── APIs
        ├── Websites
        └── Databases

By adopting this Git structure, you can effectively manage and scale your microservices projects while ensuring a clear and organized source control system.

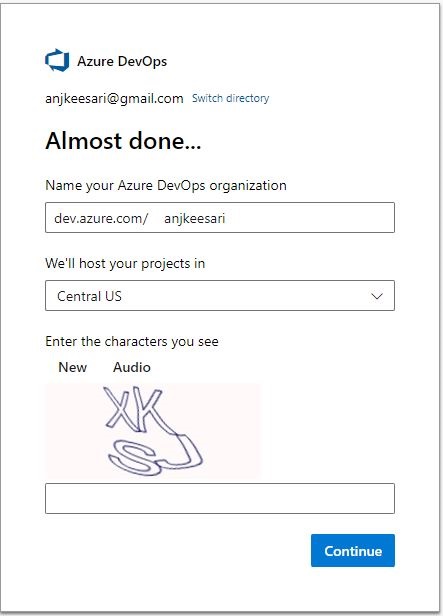

Task-3: Create new Azure DevOps Organization

With the planning and preparation of your Microservices application complete, the next step is to create a DevOps organization where you can manage the lifecycle of your projects.

To create a new Azure DevOps organization, follow these steps:

- Sign in to Azure DevOps at https://dev.azure.com

- Click on New organization in the left nav.

- Enter a name for the organization and create it.

Once you have completed these steps, you will have a new Azure DevOps organization that is ready for use. You can then invite members to join your organization and start creating new projects.

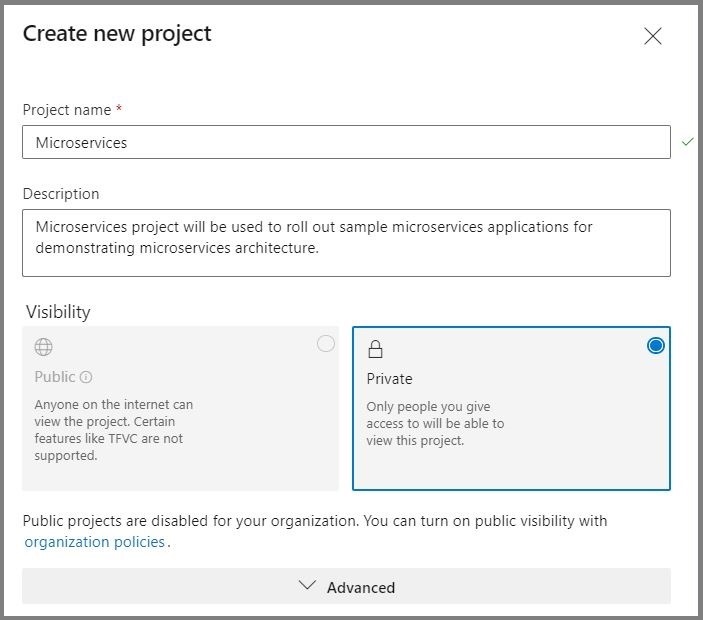

Task-4: Create new Azure DevOps Project

You need a new project in Azure DevOps to manage your source code and other project-related activities.

Follow these steps to create a new project in Azure DevOps:

- Sign in to the Azure DevOps website https://dev.azure.com/ with your Azure DevOps account.

- Click on the Create a project button.

- Enter a name for your project and select a process template. The process template determines the default work item types, source control repository, and other settings for your project.

- Click the Create project button to create your new project.

- Follow the on-screen prompts to configure your project settings, including source control, work item types, and team members.

- When you are finished, click the Create button to complete the project creation process.

For example:

Project Name - Microservices

Description - Microservices project will be used to roll out sample microservices applications for demonstrating microservices architecture.

We have now created a new organization and a new project in Azure DevOps, so we can start working on containerized microservices applications in the next labs.


Chapter-2: Exploring Docker Fundamentals

Overview

In this article, we'll explore the basics of Docker, which are the building blocks for understanding how containers work. Whether you're an experienced coder or just starting out, grasping these basics is essential for deploying applications in containers. These core concepts will come in handy as you continue your learning journey with Docker.

What is Docker?

Docker is a powerful platform that simplifies the process of developing, shipping, and running applications. Docker uses a technology known as containerization to encapsulate an application and its dependencies into a self-contained unit called a container. These containers are lightweight, portable, and consistent across different environments.

Why use Docker?

Docker simplifies the development, deployment, and management of applications, offering an adaptable solution for modern software development practices. Its popularity comes from its ability to address challenges related to consistency, scalability, and efficiency in the software development lifecycle.

Docker has become increasingly popular in the software development and IT industry due to its numerous advantages. Here are some key benefits of using Docker:

- Portability: Docker containers encapsulate applications and their dependencies, ensuring consistency across different environments. This portability eliminates the common problem of "it works on my machine" and facilitates seamless deployment across various systems.

- Isolation: Containers provide a lightweight and isolated environment for applications. Each container runs independently, preventing conflicts between dependencies and ensuring that changes made in one container do not affect others.

- Efficiency: Docker's containerization technology enables efficient resource utilization. Containers share the host OS kernel, making them lightweight compared to traditional virtual machines. This results in faster startup times and improved performance.

- Scalability: Docker makes it easy to scale applications horizontally by running multiple instances of containers. This scalability allows applications to adapt to changing workloads while keeping resource utilization efficient.

- Microservices architecture: Docker is integral to the microservices architecture, where applications are composed of small, independently deployable services. Containers facilitate the development, deployment, and scaling of microservices, enabling agility and ease of management.

- DevOps integration: Docker aligns well with DevOps practices by promoting collaboration between development and operations teams. Containers can be easily integrated into continuous integration and continuous deployment (CI/CD) pipelines, streamlining the software delivery process.

- Community support: Docker's community offers a lot of pre-made images, tools, and solutions, helping developers work faster and learn from others.

- Security: Docker provides built-in security features, such as isolation and resource constraints, to enhance application security.

- Cross-platform compatibility: Docker runs on Linux, Windows, and macOS. On Windows and macOS, Docker Desktop runs Linux containers inside a lightweight virtual machine, so the same images work across all three. This cross-platform compatibility is beneficial for teams working in heterogeneous environments.

Docker concepts

Understanding these basic concepts is essential for effectively working with Docker and leveraging its advantages in terms of portability, scalability, and consistency across different environments. Here are basic concepts of Docker:

- Containerization: Containerization is a technology that allows you to package an application and its dependencies, including libraries and configuration files, into a single container image.

- Images: An image is a lightweight, standalone, and executable package that includes everything needed to run a piece of software, including the code, runtime, libraries, and system tools. Docker images are used to create containers. They are built from a set of instructions called a Dockerfile.

- Dockerfile: A Dockerfile is a text file that contains a set of instructions for building a Docker image. It specifies the base image, adds dependencies, copies files, and defines other settings necessary for the application to run.

- Containers: Containers are instances of Docker images. They run in isolated environments, ensuring that the application behaves consistently across different environments. Containers share the host OS kernel but have their own file system, process space, and network interfaces.

- Registries: Docker images can be stored and shared through registries. The default registry is Docker Hub, but private registries can also be used. Registries allow versioning, distribution, and collaboration on Docker images.

- Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications. It allows you to define a multi-container application in a single file, specifying services, networks, and volumes.

- Docker Engine: Docker Engine is the core component that manages Docker containers. It includes a server, REST API, and a command-line interface (CLI). The Docker daemon runs on the host machine, and the Docker CLI communicates with it to build, run, and manage containers.

- Volumes: Volumes provide a way for containers to persist data outside their lifecycle. They can be used to share data between containers or to persist data even if a container is stopped or removed.

- Networking: Docker provides networking capabilities that allow containers to communicate with each other or with the external world. Containers can be connected to different networks, and ports can be mapped between the host and the containers.
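Several of these concepts (images, containers, volumes, and port mapping) come together in a single `docker run` command. As a rough illustration, the helper below assembles such a command from its parts; the function name `docker_run_command`, the container name `web`, and the volume name `web-data` are hypothetical, while the flags `-d`, `--name`, `-p`, and `-v` are standard `docker run` options. The script only prints the command and does not execute it.

```python
import shlex


def docker_run_command(image, name=None, detach=False, ports=None, volumes=None):
    """Build the argument list for a `docker run` invocation."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")                                   # run in the background
    if name:
        args += ["--name", name]                            # container name
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]     # host:container port mapping
    for volume, mount_path in (volumes or {}).items():
        args += ["-v", f"{volume}:{mount_path}"]            # named volume mounted in the container
    args.append(image)                                      # image to create the container from
    return args


command = docker_run_command(
    "nginx",
    name="web",
    detach=True,
    ports={8080: 80},
    volumes={"web-data": "/usr/share/nginx/html"},
)
print(shlex.join(command))
# prints: docker run -d --name web -p 8080:80 -v web-data:/usr/share/nginx/html nginx
```

Reading the printed command from left to right maps directly onto the concepts above: the container is created from the `nginx` image, host port 8080 is mapped to container port 80, and the `web-data` volume persists the served files.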

Container orchestration

Whether you manage a small cluster or a large-scale production environment, a container orchestrator automates the deployment, scaling, and recovery of containerized applications. Here are some common container orchestration platforms:

- Kubernetes: Kubernetes is the most widely adopted container orchestration platform. It automates the deployment, scaling, and management of containerized applications, providing a robust and extensible framework.

- Docker Swarm: Docker Swarm is a native clustering and orchestration solution provided by Docker. While it may not be as feature-rich as Kubernetes, it offers simplicity and seamless integration with Docker.

- Amazon ECS: Amazon Elastic Container Service (ECS) is a fully managed container orchestration service provided by AWS. It integrates with other AWS services and is suitable for users already utilizing the AWS ecosystem.

- Azure Kubernetes Service (AKS): AKS is a managed Kubernetes service offered by Microsoft Azure. It simplifies the deployment and management of Kubernetes clusters in the Azure cloud.

Docker Desktop

Docker Desktop provides a user-friendly interface and environment for developing, building, and testing applications with Docker containers on your local machine.

Install Docker

Here are the steps to install Docker on different operating systems:

Windows:

Download Docker Desktop:

- Visit the Docker Desktop for Windows page.

- Click on the "Download for Windows" button.

- Follow the on-screen instructions to download the installer.

Install Docker Desktop:

- Run the installer that you downloaded.

- Follow the installation wizard, accepting the default options.

- The installer may require you to restart your computer.

Enable Hyper-V (Windows 10 Pro/Enterprise):

- If you're running Windows 10 Pro or Enterprise, Docker Desktop will use Hyper-V for virtualization. Ensure that Hyper-V is enabled in the Windows Features.

Start Docker Desktop:

- Once installed, start Docker Desktop from the Start Menu.

- The Docker icon will appear in the system tray when Docker Desktop is running.

macOS:

Download Docker Desktop:

- Visit the Docker Desktop for Mac page.

- Click on the "Download for Mac" button.

- Follow the on-screen instructions to download the installer.

Install Docker Desktop:

- Run the installer that you downloaded.

- Drag the Docker icon to the Applications folder.

- Launch Docker from Applications.

Start Docker Desktop:

- Once installed, Docker Desktop should start automatically.

- The Docker icon will appear in the menu bar when Docker Desktop is running.

Verify Docker install:

To verify that Docker is installed correctly, open a terminal and run the following command:

docker version

If you see output like the following, the Docker daemon is not running:

error during connect: this error may indicate that the docker daemon is not running: Get "http://%2F%2F.%2Fpipe%2Fdocker_engine/v1.24/version": open //./pipe/docker_engine: The system cannot find the file specified.

Client:

Cloud integration: v1.0.35

Version: 24.0.2

API version: 1.43

Go version: go1.20.4

Git commit: cb74dfc

Built: Thu May 25 21:53:15 2023

OS/Arch: windows/amd64

Context: default

After Docker Desktop has started, and if everything is set up correctly, you should see output like the following. It includes a Server section, which indicates that your Docker installation is working.

Client:

Cloud integration: v1.0.35

Version: 24.0.2

API version: 1.43

Go version: go1.20.4

Git commit: cb74dfc

Built: Thu May 25 21:53:15 2023

OS/Arch: windows/amd64

Context: default

Server: Docker Desktop 4.21.1 (114176)

Engine:

Version: 24.0.2

API version: 1.43 (minimum version 1.12)

Go version: go1.20.4

Git commit: 659604f

Built: Thu May 25 21:52:17 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.21

GitCommit: 3dce8eb055cbb6872793272b4f20ed16117344f8

runc:

Version: 1.1.7

GitCommit: v1.1.7-0-g860f061

docker-init:

Version: 0.19.0

GitCommit: de40ad0

Docker is now installed on your machine, and you can start using it to containerize your applications.
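If you want to script this check, for example before running an automated build, the same verification can be done from code. The sketch below shells out to `docker version` with a format template that prints only the server (Engine) version; the function name `docker_server_version` is hypothetical, and the function returns `None` when the Docker CLI is missing or the daemon is not reachable.

```python
import shutil
import subprocess


def docker_server_version(timeout=10):
    """Return the Docker Engine version string, or None if Docker is unavailable."""
    if shutil.which("docker") is None:
        return None  # Docker CLI is not installed or not on PATH
    try:
        result = subprocess.run(
            ["docker", "version", "--format", "{{.Server.Version}}"],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    version = result.stdout.strip()
    # A non-zero exit code usually means the daemon is not running.
    return version if result.returncode == 0 and version else None


version = docker_server_version()
if version is None:
    print("Docker is not installed or the Docker daemon is not running.")
else:
    print(f"Docker Engine {version} is running.")
```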

Docker Commands

For more comprehensive details on Docker commands, please refer to the Docker Commands Cheat Sheet on our website.

Conclusion

Docker and containerization have changed the way we build and deploy applications. Now that you understand the basics of Docker, you're ready to dive deeper. Docker is straightforward and flexible, making it a great tool for developers. It ensures that your application behaves the same way across environments, keeps applications isolated from one another, and scales with your needs. So, go ahead and start your journey with containers.


Chapter-3: Getting Started with Docker

Docker is a platform for developing, shipping, and running applications in containers. Containers allow you to package an application and its dependencies into a single unit, making it easy to deploy consistently across different environments.

In this lab, I will guide you through the process of creating Docker images, containers, and finally accessing the sample application in the web browser.

If you are new to Docker and want to learn its fundamental concepts, please see the previous chapter, Exploring Docker Fundamentals.

Objective

In this exercise, our objective is to accomplish and learn the following tasks:

- Step 1: Get the Sample Application

- Step 2: Create Docker Image

- Step 3: Create Docker Container

- Step 4: Port Binding

- Step 5: Browse the Frontend Application

- Step 6: View Docker Logs

- Step 7: Docker Commands

Prerequisites

Before starting this lab, ensure you have the following prerequisites in place:

- Visual Studio Code: Visual Studio Code Downloads.

- Docker Desktop: Docker Downloads.

- Git client tool: Git Downloads.

Verify the Docker installation before you begin, for example by running docker version in a terminal.

Step 1: Get the Sample Application

To begin, you'll need a sample application to work with. You can either use an existing application or create a simple one.

In this task, we'll start by searching for an image to run locally. For example, we'll use the Nginx image from Docker Hub using the following URL: Docker Hub Search

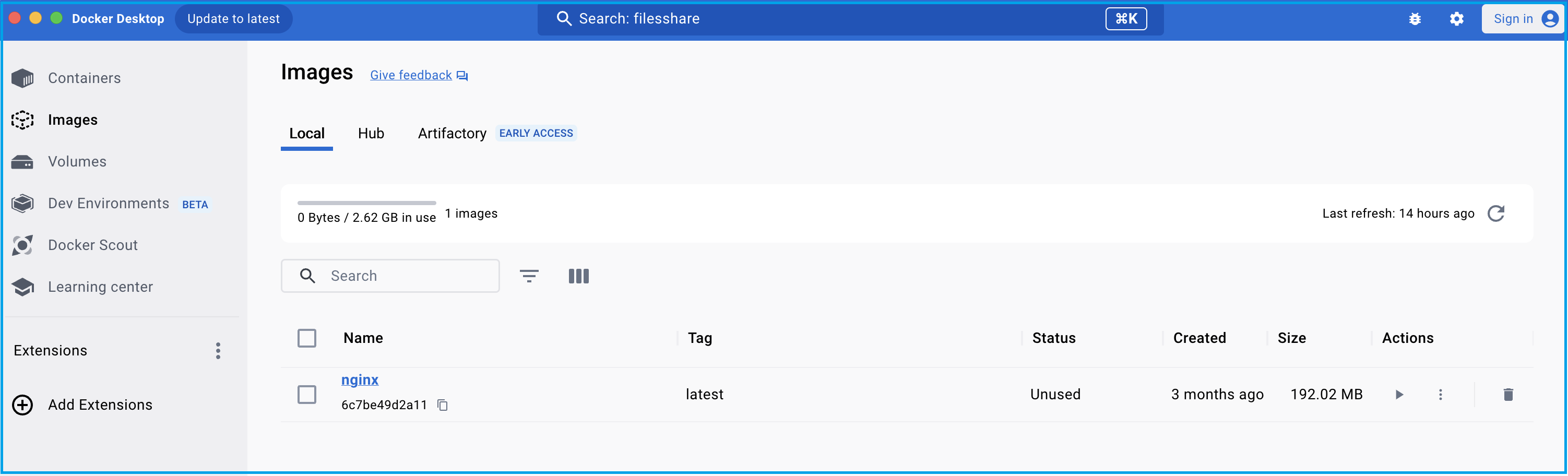

Step 2: Create Docker Image

Now that we've identified the image we want to use, let's pull it from Docker Hub into our local Docker Desktop and run it locally.

docker pull nginx

# output

Using default tag: latest

latest: Pulling from library/nginx

a5573528b1f0: Pull complete

8897d65c8417: Pull complete

fbc138d1d206: Pull complete

06f386eb9182: Pull complete

aeb2f3db77c3: Pull complete

64fb762834ec: Pull complete

e5a7e61f6ff4: Pull complete

Digest: sha256:4c0fdaa8b6341bfdeca5f18f7837462c80cff90527ee35ef185571e1c327beac

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

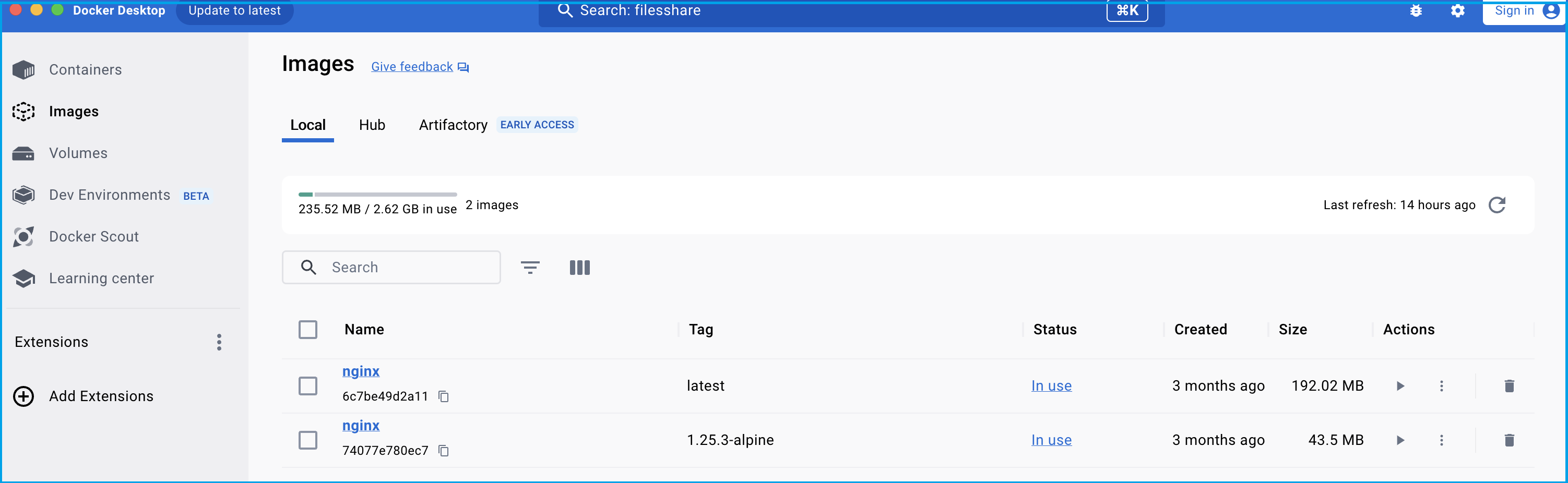

List Docker images from Docker Desktop:

docker images

# output

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 6c7be49d2a11 2 months ago 192MB

Step 3: Create Docker Container

In this step, we'll create a Docker container by running the docker run command for the image.

docker run nginx

# output

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/01/15 04:27:56 [notice] 1#1: using the "epoll" event method

2024/01/15 04:27:56 [notice] 1#1: nginx/1.25.3

2024/01/15 04:27:56 [notice] 1#1: built by gcc 12.2.0 (Debian 12.2.0-14)

2024/01/15 04:27:56 [notice] 1#1: OS: Linux 6.3.13-linuxkit

2024/01/15 04:27:56 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/01/15 04:27:56 [notice] 1#1: start worker processes

2024/01/15 04:27:56 [notice] 1#1: start worker process 29

2024/01/15 04:27:56 [notice] 1#1: start worker process 30

2024/01/15 04:27:56 [notice] 1#1: start worker process 31

2024/01/15 04:27:56 [notice] 1#1: start worker process 32

2024/01/15 04:27:56 [notice] 1#1: start worker process 33

Open a new terminal and run the following command to list containers:

docker ps

# output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

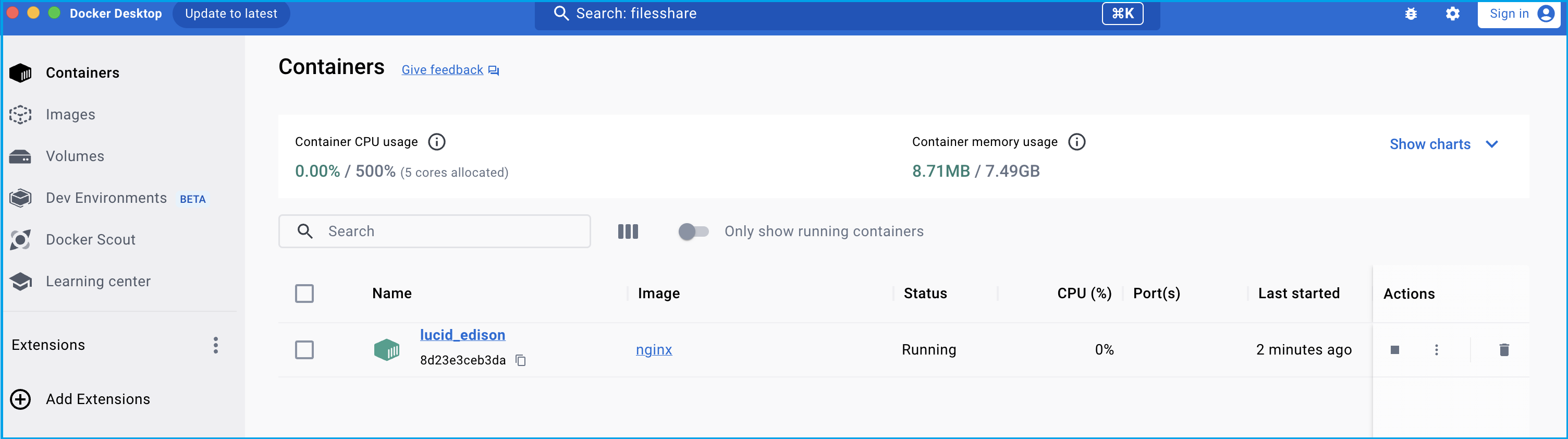

8d23e3ceb3da nginx "/docker-entrypoint.…" 3 minutes ago Up 2 minutes 80/tcp lucid_edison

You can watch the container logs in the first terminal.

To exit the container, press Ctrl + C.

2024/01/15 04:28:00 [notice] 1#1: signal 28 (SIGWINCH) received

2024/01/15 04:28:00 [notice] 1#1: signal 28 (SIGWINCH) received

2024/01/15 04:30:50 [notice] 1#1: signal 28 (SIGWINCH) received

2024/01/15 04:30:50 [notice] 1#1: signal 28 (SIGWINCH) received

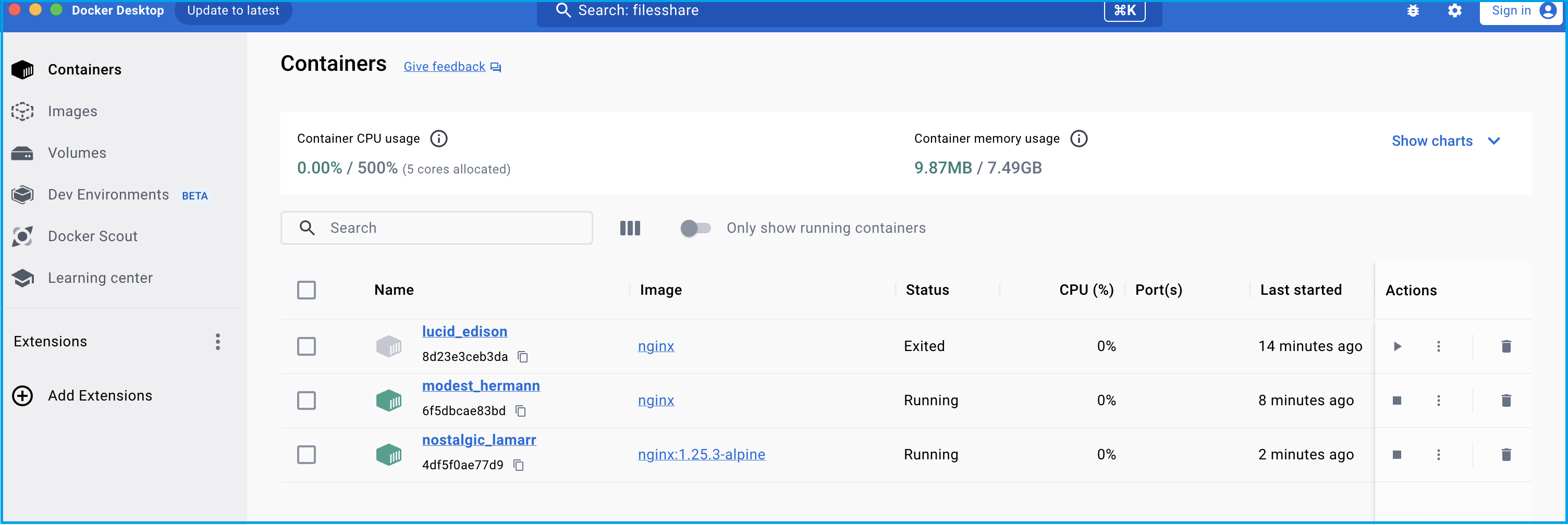

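To run the container in the background (detached mode) instead, add the -d flag. Docker then prints only the full ID of the new container:

docker run -d nginx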

# output

6f5dbcae83bd3ac6a0ea8bdb45f753bf72a723179503d4b4ebce4ddeae2378e2

# Now, you can run the following command to see the list of running containers:

docker ps
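The long ID printed by docker run -d and the short ID listed by docker ps refer to the same container: docker ps shows the first 12 characters. The sketch below illustrates this with the ID from the example above. The helper name short_id is an assumption made for illustration and is not part of Docker.

```shell
# Full ID printed by `docker run -d nginx` in the example above.
full_id="6f5dbcae83bd3ac6a0ea8bdb45f753bf72a723179503d4b4ebce4ddeae2378e2"

# short_id is a hypothetical helper: it keeps the first 12 characters,
# which is the form `docker ps` shows in the CONTAINER ID column.
short_id() {
  printf '%.12s\n' "$1"
}

short_id "$full_id"   # prints: 6f5dbcae83bd
```

Either form of the ID can be passed to commands such as docker stop or docker logs.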

You can also run an image that has not been pulled yet. Docker downloads it from Docker Hub first and then starts the container. For example:

docker run nginx:1.25.3-alpine

# output

Unable to find image 'nginx:1.25.3-alpine' locally

1.25.3-alpine: Pulling from library/nginx

2c03dbb20264: Pull complete

0ed066aadd11: Pull complete

4eeb1ddd7404: Pull complete

9ba8827f116b: Pull complete

2bc60ecca38f: Pull complete

11d942ec6258: Pull complete

fed1b403bb45: Pull complete

392e92e0a8e8: Pull complete

Digest: sha256:a59278fd22a9d411121e190b8cec8aa57b306aa3332459197777583beb728f59

Status: Downloaded newer image for nginx:1.25.3-alpine

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/01/15 04:39:23 [notice] 1#1: using the "epoll" event method

2024/01/15 04:39:23 [notice] 1#1: nginx/1.25.3

2024/01/15 04:39:23 [notice] 1#1: built by gcc 12.2.1 20220924 (Alpine 12.2.1_git20220924-r10)

2024/01/15 04:39:23 [notice] 1#1: OS: Linux 6.3.13-linuxkit

2024/01/15 04:39:23 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/01/15 04:39:23 [notice] 1#1: start worker processes

2024/01/15 04:39:23 [notice] 1#1: start worker process 29

2024/01/15 04:39:23 [notice] 1#1: start worker process 30

2024/01/15 04:39:23 [notice] 1#1: start worker process 31

2024/01/15 04:39:23 [notice] 1#1: start worker process 32

2024/01/15 04:39:23 [notice] 1#1: start worker process 33

docker ps

# output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4df5f0ae77d9 nginx:1.25.3-alpine "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp nostalgic_lamarr

6f5dbcae83bd nginx "/docker-entrypoint.…" 6 minutes ago Up 6 minutes 80/tcp modest_hermann

You can view the same images and containers in Docker Desktop.

You can also run the following commands to list the images and containers on your machine:

docker image ls

# output

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 6c7be49d2a11 2 months ago 192MB

nginx 1.25.3-alpine 74077e780ec7 2 months ago 43.5MB

docker container ls

# output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4df5f0ae77d9 nginx:1.25.3-alpine "/docker-entrypoint.…" 5 minutes ago Up 5 minutes 80/tcp nostalgic_lamarr

6f5dbcae83bd nginx "/docker-entrypoint.…" 10 minutes ago Up 10 minutes 80/tcp modest_hermann

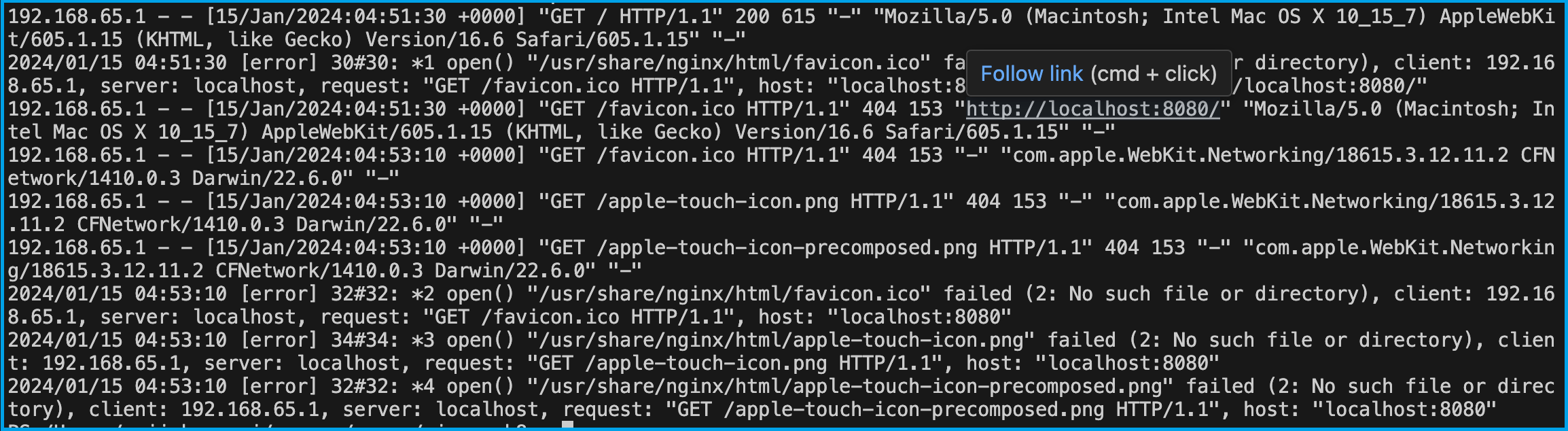

Step 4: Port Binding

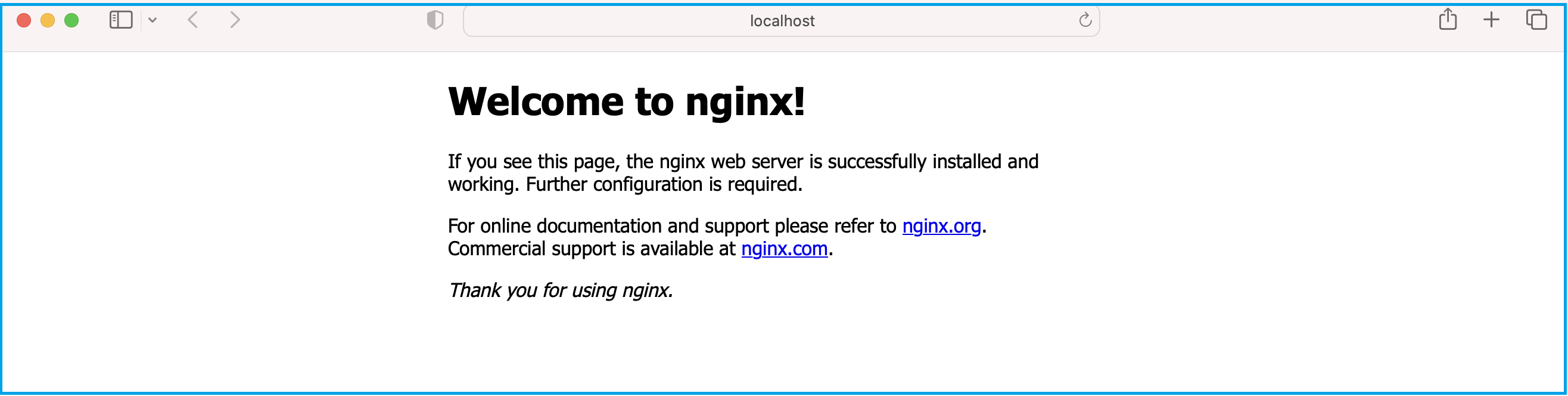

To reach the application from your host, publish a container port with the -p flag in the form host-port:container-port. The command below runs the container in the background and maps port 8080 on your host to port 80 in the container, so you can access the application at http://localhost:8080 in your web browser.

docker run -d -p 8080:80 nginx:1.25.3-alpine

# output

f21ada11af57b799c9b834d0a6c8e6e1628c6289d64cf65fdc0968cbe94500fd
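The order of the two numbers in the -p value is easy to mix up. As a small sketch, the function below splits a mapping into its two parts and prints which side is which. The name describe_mapping is hypothetical, and the helper only handles the two-part host:container form used in this chapter.

```shell
# describe_mapping is a hypothetical helper that splits a HOST:CONTAINER
# value, as passed to `docker run -p`, into its two parts.
describe_mapping() {
  host_port="${1%%:*}"       # text before the first colon
  container_port="${1##*:}"  # text after the last colon
  echo "host port ${host_port} -> container port ${container_port}"
}

describe_mapping "8080:80"   # prints: host port 8080 -> container port 80
```

Changing only the left-hand number, for example 3000:80, would expose the same nginx container on a different host port.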

Step 5: Browse the Frontend Application

Open your web browser and navigate to http://localhost:8080 to access the nginx welcome page served from the Docker container.

Step 6: View Docker Logs

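To view the logs of a running container, use the docker logs command followed by the container ID or name. This displays the logs generated by your application.

To find the container ID or name, list all containers, including stopped ones: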

docker ps -a

# output

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f21ada11af57 nginx:1.25.3-alpine "/docker-entrypoint.…" 7 minutes ago Up 7 minutes 0.0.0.0:8080->80/tcp nifty_goldberg

4df5f0ae77d9 nginx:1.25.3-alpine "/docker-entrypoint.…" 19 minutes ago Up 19 minutes 80/tcp nostalgic_lamarr

6f5dbcae83bd nginx "/docker-entrypoint.…" 25 minutes ago Exited (0) 10 minutes ago modest_hermann

8d23e3ceb3da nginx "/docker-entrypoint.…" 31 minutes ago Exited (0) 25 minutes ago lucid_edison
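When several containers are listed, it can help to wrap the common lookups in small functions. The following is a minimal sketch, assuming Docker is installed and running. The function names show_logs and list_exited are made up for this example, while the docker logs --tail, docker ps --filter and --format options they use are standard Docker CLI flags.

```shell
# show_logs and list_exited are hypothetical helper names; the docker
# subcommands and flags they wrap are standard Docker CLI options.

# Print the last N log lines (default 20) of a container given by name or ID.
show_logs() {
  docker logs --tail "${2:-20}" "$1"
}

# List only containers that have exited, with ID, name and status.
list_exited() {
  docker ps -a --filter "status=exited" --format '{{.ID}} {{.Names}} {{.Status}}'
}
```

With the containers from the listing above, show_logs nifty_goldberg 5 would print the five most recent log lines of the container that publishes port 8080, and list_exited would list the two stopped nginx containers.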

Naming the Container:

You can also name the container using the --name flag:

docker run --name nginx-app -d -p 8080:80 nginx:1.25.3-alpine

# output

58e464680a8da16b717171732fb1b67b678b1c8efb115f9adad8d3257c6cc875

# run the following command to see the name

docker ps

# the name can then be used in place of the container ID, for example to view the logs

docker logs nginx-app
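Container names must be unique, so running the same docker run --name command a second time fails while a container with that name still exists. One possible way to make the command repeatable is sketched below. The function name recreate_nginx is an assumption for illustration, and the sketch assumes Docker is installed and running.

```shell
# recreate_nginx is a hypothetical helper.
#   $1 = container name, $2 = host port to publish
recreate_nginx() {
  name="$1"
  host_port="$2"
  # Remove a previous container with the same name, if any.
  # The error is ignored when no such container exists.
  docker rm -f "$name" >/dev/null 2>&1 || true
  # Start a fresh container and publish container port 80 on the host port.
  docker run --name "$name" -d -p "${host_port}:80" nginx:1.25.3-alpine
}
```

For example, recreate_nginx nginx-app 8080 replaces any existing nginx-app container. Note that a host port can be bound by only one container at a time, so another container that already publishes port 8080 has to be stopped first, or a different host port chosen.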

Step 7: Docker Commands

For more comprehensive details on Docker commands, please refer to the Docker Commands Cheat Sheet on our website.

Conclusion

In summary, this guide introduced you to Docker, a tool that simplifies how we build, package, and run applications. We've covered essential steps, like getting an example application (nginx), creating Docker images and running containers. We've also learned how to manage ports, access apps in web browsers, and check what's happening behind the scenes with Docker logs. Plus, we touched on some common Docker commands.

Docker is a powerful tool for containerization, enabling you to package and deploy applications with ease. By following these steps, you've created your first Docker application.


Chapter-4: Create Your First Microservice with .NET Core Web API

Introduction

Welcome to the first lab in our Microservices chapter. In this lab, we will create a simple RESTful service using the ASP.NET Core Web API project template.

This lab will demonstrate the process of building a RESTful service and packaging it as a Docker container using a Dockerfile. By following this example, you will learn the fundamentals of creating RESTful APIs using ASP.NET Core.

Technical Scenario

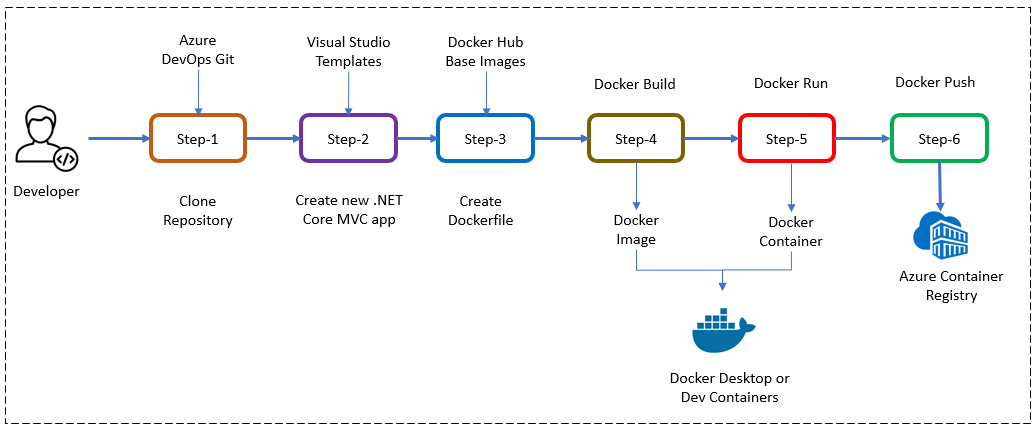

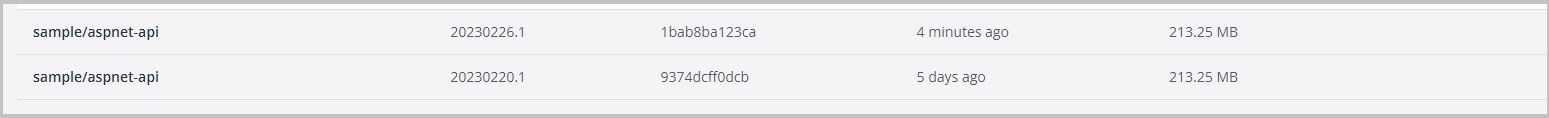

As a Backend (BE) developer, you have been tasked with creating a RESTful service using .NET Core Web API, which is one of the services on our microservices list. This lab will serve as your introduction to the Microservices Architecture, starting with the basics of setting up a repository, creating a small project, and ultimately containerizing the microservice you build. The containerized microservice will then be pushed to the Azure Container Registry (ACR).

The primary objective of this lab is to prepare an application for deployment on Kubernetes. The microservices you create in this lab will be utilized in subsequent labs, such as the creation of DevOps pipelines or the deployment to Azure Kubernetes Service (AKS). By completing this lab, you will gain a foundational understanding of how microservices can be developed, containerized, and integrated into a Kubernetes environment.

Objective

In this exercise, our objective is to accomplish and learn the following tasks:

- Step-1: Create a new repo in Azure DevOps

- Step-2: Clone the repository

- Step-3: Create a new Web API project

- Step-4: Test Web API project

- Step-5: Add Dockerfiles to the project

- Step-6: Build & Test docker container locally

- Step-7: Publish docker container to ACR

Prerequisites

Before starting this lab, ensure you have the following prerequisites in place:

- An Organization in Azure DevOps

- A Project in Azure DevOps

- Create Repository permission

- Git client tool

- Download and install software for .NET development

- Docker and the VS Code Docker extension

- Azure Container Registry (ACR)

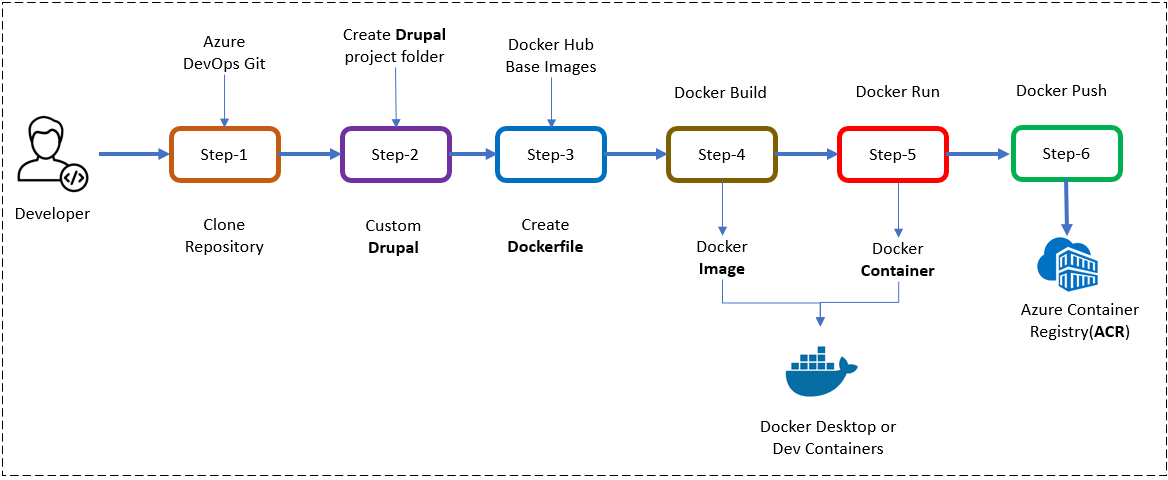

Architecture Diagram

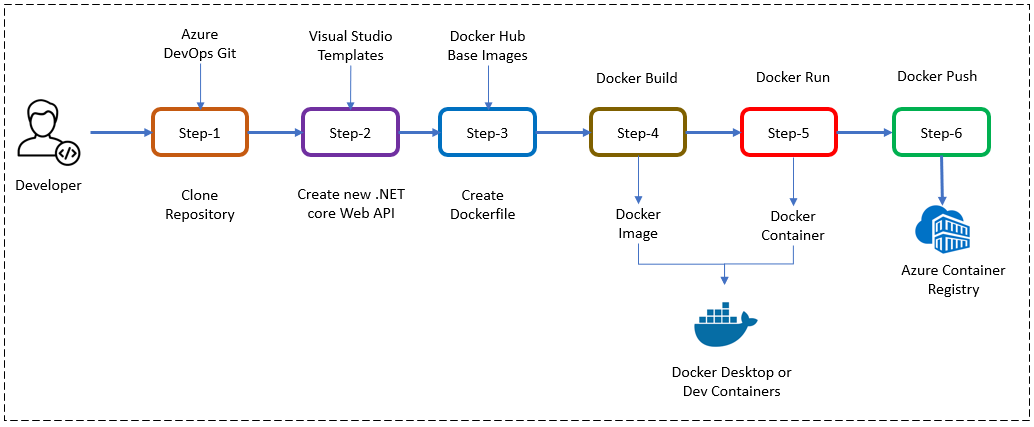

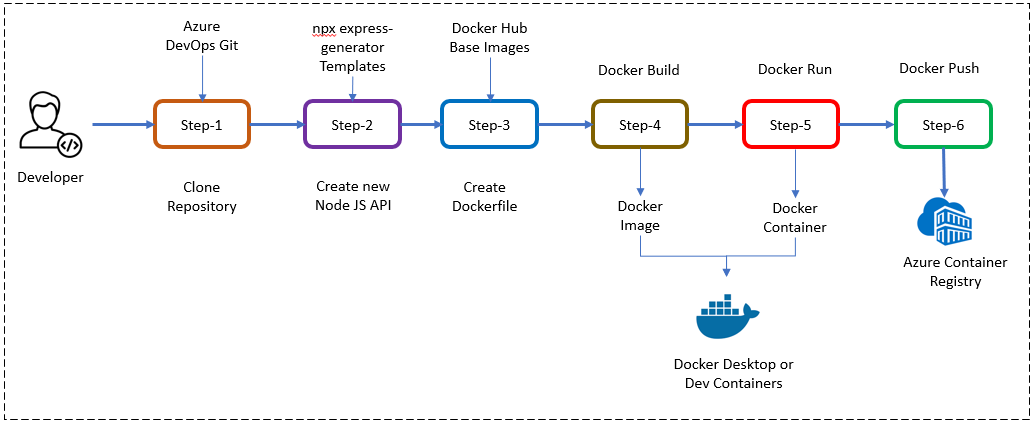

The following diagram shows the high-level steps to create the RESTful service using .NET Core.

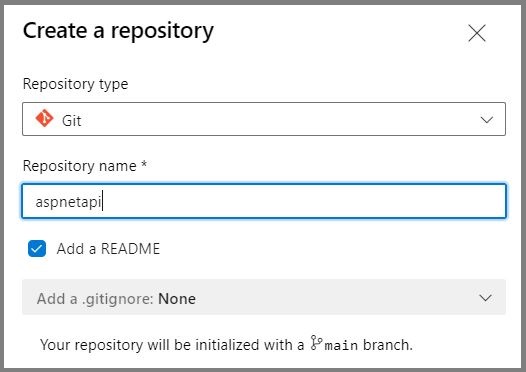

Step-1: Create a new repo in Azure DevOps

We will create a new repository in Azure DevOps to store our project code and related files.

To create a new repository in Azure DevOps, follow these steps:

- Log in to Azure DevOps

- Select the project where we want to create the repo

- Click on the Repos left nav link

- From the repo drop-down, select New repository

- In the Create a new repository dialog, verify that Git is the repository type and enter a name for the new repository.

- You can also add a README and create a .gitignore for the type of code you plan to manage in the repo.

- I'd prefer to use lower case for all repos (one of the best practices)

- Repo name - aspnetapi

Best-practice

When creating repositories in Azure DevOps, it is recommended to use lower case for all repository names. Using lower case consistently throughout your repositories helps maintain consistency, readability, and ease of navigation within your projects.

By adhering to this best practice, you ensure that your repository names are uniform and standardized, regardless of the specific domain or microservice they represent. This practice promotes clarity and reduces the chances of confusion or inconsistencies when working with multiple repositories within your organization.

For example:
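As a small illustration of this convention, a proposed name can be normalized to lower case before the repository is created. The helper name to_repo_name and the input AspNetApi are hypothetical and used only for this sketch.

```shell
# to_repo_name is a hypothetical helper that converts a proposed
# repository name to lower case.
to_repo_name() {
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]'
}

to_repo_name "AspNetApi"   # prints: aspnetapi
```

The result matches the repository name used in this chapter, aspnetapi.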

Step-2: Clone the repo from Azure DevOps

After creating the repository, we will clone it locally to establish a local working copy of the project.

To clone a repository from Azure DevOps, you will need to have the Git client installed on your local machine. Follow these steps to clone the source code locally:

- Sign in to the Azure DevOps website with your Azure DevOps account.

- Navigate to the project that contains the repository you want to clone.

- Click on the Repos tab in the navigation menu.

- Find the repository you want to clone and click on the Clone button.

- Copy the URL of the repository.

- Open a terminal window or command prompt on your local machine, and navigate to the directory where you want to clone the repository.

- Run the git clone command with the copied URL to clone the repository.

- When prompted, enter your Azure DevOps credentials.

The repository will be cloned to your local machine, and you can start working with the code.

Examples:

C:\Users\anji.keesari>cd C:\Source\Repos

C:\Source\Repos>git clone https://keesari.visualstudio.com/Microservices/_git/aspnetapi

or

# cloning from main branch for the first time

git clone https://keesari.visualstudio.com/Microservices/_git/aspnetapi -b main C:\Source\Repos\Microservices\aspnetapi

# cloning from feature branches

git clone https://keesari.visualstudio.com/Microservices/_git/aspnetapi -b develop C:\Source\Repos\Microservices\aspnetapi
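If you clone branches often, the commands above can be wrapped in two small functions, one to clone and one to confirm which branch ended up checked out. This is only a sketch that assumes Git is installed. The function names clone_branch and current_branch are hypothetical.

```shell
# clone_branch and current_branch are hypothetical helper names.

# Clone a repository and check out the given branch.
#   $1 = repository URL, $2 = branch name, $3 = target directory
clone_branch() {
  git clone -b "$2" "$1" "$3"
}

# Print the branch that is checked out in a local clone.
#   $1 = path to the local clone
current_branch() {
  git -C "$1" rev-parse --abbrev-ref HEAD
}
```

For instance, after clone_branch https://keesari.visualstudio.com/Microservices/_git/aspnetapi develop aspnetapi, running current_branch aspnetapi should report develop.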

Please refer to our Git Cheat-Sheet, which provides a comprehensive list of Git commands and their usage.

Step-3: Create a new .NET Core Web API project

Using the .NET Core Web API template, we will create a new project that serves as the foundation for our RESTful service.

We will use Visual Studio Code instead of Visual Studio because it is lightweight, quick to set up, and free.

Best-practice

I recommend using Visual Studio Code (VS Code) as your preferred development environment instead of Visual Studio.

Visual Studio Code is a lightweight, cross-platform code editor that offers powerful features and extensions tailored for modern development workflows. It provides a streamlined and customizable interface, allowing you to focus on coding without unnecessary overhead.

To create a new .NET Core Web API project, you will need to have the .NET Core SDK installed on your machine. You can download the .NET Core SDK from the official .NET download page.

Once you have the .NET Core SDK installed, follow these steps to create a new .NET Core Web API project:

- Open a terminal window and navigate to the directory where you want to create your project.

- Run the